Queries & Syntax

- 1: GROUP BY

- 1.1: GROUP BY tricks

- 2: Adjustable table partitioning

- 3: DateTime64

- 4: DISTINCT & GROUP BY & LIMIT 1 BY what the difference

- 5: Imprecise parsing of literal Decimal or Float64

- 6: Multiple aligned date columns in PARTITION BY expression

- 7: Row policies overhead (hiding 'removed' tenants)

- 8: Why is simple `SELECT count()` Slow in ClickHouse®?

- 9: Collecting query execution flamegraphs using system.trace_log

- 10: Using array functions to mimic window-functions alike behavior

- 11: -State & -Merge combinators

- 12: ALTER MODIFY COLUMN is stuck, the column is inaccessible.

- 13: ANSI SQL mode

- 14: Async INSERTs

- 15: Atomic insert

- 16: ClickHouse® Projections

- 17: Cumulative Anything

- 18: Data types on disk and in RAM

- 19: DELETE via tombstone column

- 20: EXPLAIN query

- 21: Fill missing values at query time

- 22: FINAL clause speed

- 23: Join with Calendar using Arrays

- 24: JOINs

- 24.1: JOIN optimization tricks

- 25: JSONExtract to parse many attributes at a time

- 26: KILL QUERY

- 27: Lag / Lead

- 28: Machine learning in ClickHouse

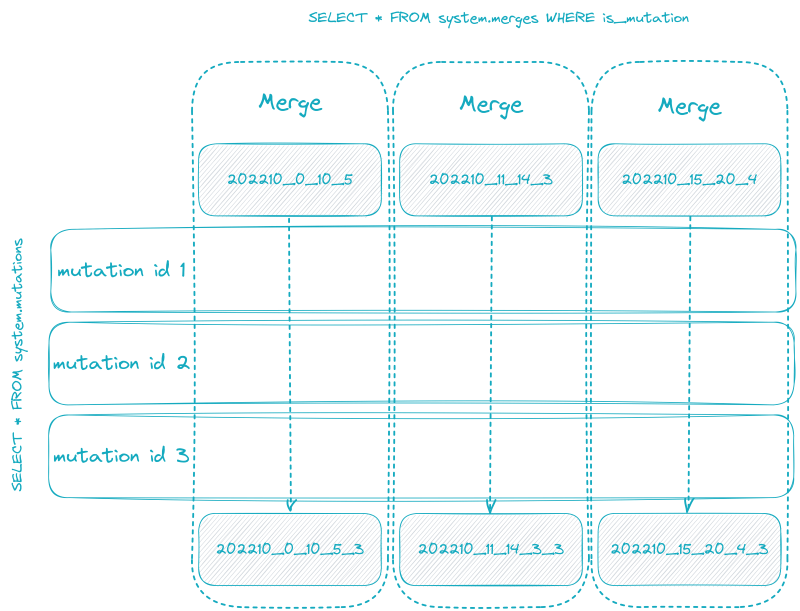

- 29: Mutations

- 30: OPTIMIZE vs OPTIMIZE FINAL

- 31: Parameterized views

- 32: Use both projection and raw data in single query

- 33: PIVOT / UNPIVOT

- 34: Possible deadlock avoided. Client should retry

- 35: Roaring bitmaps for calculating retention

- 36: SAMPLE by

- 37: Sampling Example

- 38: Simple aggregate functions & combinators

- 39: Skip indexes

- 39.1: Example: minmax

- 39.2: Skip index bloom_filter Example

- 39.3: Skip indexes examples

- 40: Time zones

- 41: Time-series alignment with interpolation

- 42: Top N & Remain

- 43: Troubleshooting

- 44: TTL

- 44.1: MODIFY (ADD) TTL in ClickHouse®

- 44.2: What are my TTL settings?

- 44.3: TTL GROUP BY Examples

- 44.4: TTL Recompress example

- 45: UPDATE via Dictionary

- 46: Values mapping

- 47: Window functions

1 - GROUP BY

Internal implementation

ClickHouse® aggregates without locking a shared hash table: each thread has at least one hash table of its own.

This removes the need to synchronize between threads, but has the following disadvantages:

- Bigger memory usage.

- The per-thread hash tables need to be merged afterwards.

Because the merge step can become a bottleneck for a really big GROUP BY with a lot of distinct keys, a second approach was introduced.

Two-Level

https://youtu.be/SrucFOs8Y6c?t=2132

┌─name───────────────────────────────┬─value────┬─changed─┬─description────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┬─min──┬─max──┬─readonly─┬─type───┐

│ group_by_two_level_threshold │ 100000 │ 0 │ From what number of keys, a two-level aggregation starts. 0 - the threshold is not set. │ ᴺᵁᴸᴸ │ ᴺᵁᴸᴸ │ 0 │ UInt64 │

│ group_by_two_level_threshold_bytes │ 50000000 │ 0 │ From what size of the aggregation state in bytes, a two-level aggregation begins to be used. 0 - the threshold is not set. Two-level aggregation is used when at least one of the thresholds is triggered. │ ᴺᵁᴸᴸ │ ᴺᵁᴸᴸ │ 0 │ UInt64 │

└────────────────────────────────────┴──────────┴─────────┴────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────┴──────┴──────┴──────────┴────────┘

To parallelize the merging of hash tables, i.e. to execute the merge with multiple threads, ClickHouse uses a two-level approach:

In the first step ClickHouse creates 256 buckets for each thread (the bucket is determined by one byte of the hash function). In the second step ClickHouse can merge those 256 buckets independently, using multiple threads.
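The two steps can be sketched in a few lines of Python. This is only an illustration of the idea, not ClickHouse code: the function names are hypothetical, CRC32 stands in for the real hash function, and the aggregate is a plain count().

```python
import zlib
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor

NUM_BUCKETS = 256  # one byte of the hash selects the bucket


def bucket_of(key: str) -> int:
    # Assumption: CRC32 as a stand-in hash; its top byte gives 0..255.
    return zlib.crc32(key.encode()) >> 24


def aggregate_chunk(rows):
    """Step 1: one thread builds 256 small hash tables (count per key)."""
    buckets = [defaultdict(int) for _ in range(NUM_BUCKETS)]
    for key in rows:
        buckets[bucket_of(key)][key] += 1
    return buckets


def merge_bucket(args):
    """Step 2: merge bucket b of every thread; different buckets never share keys."""
    b, per_thread = args
    merged = defaultdict(int)
    for buckets in per_thread:
        for key, cnt in buckets[b].items():
            merged[key] += cnt
    return dict(merged)


def two_level_count(chunks, workers=4):
    with ThreadPoolExecutor(workers) as pool:
        per_thread = list(pool.map(aggregate_chunk, chunks))
        tasks = [(b, per_thread) for b in range(NUM_BUCKETS)]
        result = {}
        for part in pool.map(merge_bucket, tasks):
            result.update(part)
    return result
```

Because a key always lands in the same bucket number, the 256 merge tasks are independent and need no locking.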

GROUP BY in external memory

It uses the two-level GROUP BY and dumps those buckets to disk. At the last stage ClickHouse reads the buckets from disk one by one and merges them, so you should have enough RAM to hold one bucket (1/256 of the whole GROUP BY size).
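A rough Python sketch of that flow, under simplifying assumptions: the helper names and file layout are made up for illustration, CRC32 stands in for the real hash, and pickle files stand in for the on-disk format.

```python
import os
import pickle
import tempfile
import zlib
from collections import defaultdict

NUM_BUCKETS = 256


def spill(chunk, directory, chunk_id):
    """Aggregate one chunk into buckets and dump every non-empty bucket to disk."""
    buckets = defaultdict(lambda: defaultdict(int))
    for key in chunk:
        buckets[zlib.crc32(key.encode()) >> 24][key] += 1
    for b, table in buckets.items():
        path = os.path.join(directory, f"bucket{b}_chunk{chunk_id}.bin")
        with open(path, "wb") as f:
            pickle.dump(dict(table), f)


def external_count(chunks):
    result = {}
    with tempfile.TemporaryDirectory() as directory:
        for i, chunk in enumerate(chunks):
            spill(chunk, directory, i)
        files = os.listdir(directory)
        # Final stage: only one bucket is merged in memory at a time.
        for b in range(NUM_BUCKETS):
            merged = defaultdict(int)
            for name in files:
                if name.startswith(f"bucket{b}_"):
                    with open(os.path.join(directory, name), "rb") as f:
                        for key, cnt in pickle.load(f).items():
                            merged[key] += cnt
            # A real engine would stream this bucket out; the sketch collects it.
            result.update(merged)
    return result
```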

optimize_aggregation_in_order GROUP BY

It usually works slower than a regular GROUP BY, because ClickHouse needs to read and process data in a specific order, which makes it much harder to parallelize reading and aggregating.

But it uses much less memory, because ClickHouse can stream the result set and does not need to keep it in memory.
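The memory saving comes from the input order: once the key changes, the previous group is complete and can be emitted. A minimal Python sketch of that idea (the function name is hypothetical, and the input is assumed to be already sorted by key):

```python
from itertools import groupby


def aggregate_in_order(sorted_rows):
    """Yield (key, sum) as soon as a run of equal keys ends.

    Only the current group is kept in memory; there is no hash table.
    """
    for key, run in groupby(sorted_rows, key=lambda row: row[0]):
        yield key, sum(value for _, value in run)
```

For example, `list(aggregate_in_order([("a", 1), ("a", 2), ("b", 5)]))` produces one row per key while holding a single running sum.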

Last item cache

ClickHouse saves the result of the previous hash calculation, in case the next value is the same.

https://github.com/ClickHouse/ClickHouse/pull/5417 https://github.com/ClickHouse/ClickHouse/blob/808d9afd0f8110faba5ae027051bf0a64e506da3/src/Common/ColumnsHashingImpl.h#L40
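The effect is easiest to see on sorted or repetitive input. The Python class below is a hypothetical illustration of the idea, not a port of the linked C++ code: it remembers the cell found for the previous key and reuses it when the key repeats.

```python
class CachedCounter:
    """Count keys, skipping the hash-table lookup when the key repeats."""

    def __init__(self):
        self.table = {}        # key -> [count]
        self.last_key = None
        self.last_cell = None  # cell found by the previous lookup
        self.lookups = 0       # number of real hash-table lookups

    def add(self, key):
        if self.last_cell is not None and key == self.last_key:
            self.last_cell[0] += 1  # cache hit: reuse the previous cell
            return
        self.lookups += 1
        cell = self.table.setdefault(key, [0])
        cell[0] += 1
        self.last_key, self.last_cell = key, cell
```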

StringHashMap

StringHashMap actually uses 5 different hash tables, selected by key length:

- For empty strings

- For strings of 1 to 8 bytes

- For strings of 9 to 16 bytes

- For strings of 17 to 24 bytes

- For strings longer than 24 bytes
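The dispatch can be pictured as a simple function of the key length. The sketch below is illustrative only: the function name and labels are made up, and it assumes inclusive upper bounds of 8, 16 and 24 bytes, which matches the timings that follow (a 24-byte key is still fast, a 25-byte key is not).

```python
def sub_table_for(key: bytes) -> str:
    """Return which of the five sub-tables a key would go to (assumed bounds)."""
    n = len(key)
    if n == 0:
        return "empty"
    if n <= 8:
        return "1..8 bytes"
    if n <= 16:
        return "9..16 bytes"
    if n <= 24:
        return "17..24 bytes"
    return "longer than 24 bytes"
```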

SELECT count()

FROM

(

SELECT materialize('1234567890123456') AS key -- length(key) = 16

FROM numbers(1000000000)

)

GROUP BY key

Aggregator: Aggregation method: key_string

Elapsed: 8.888 sec. Processed 1.00 billion rows, 8.00 GB (112.51 million rows/s., 900.11 MB/s.)

SELECT count()

FROM

(

SELECT materialize('12345678901234567') AS key -- length(key) = 17

FROM numbers(1000000000)

)

GROUP BY key

Aggregator: Aggregation method: key_string

Elapsed: 9.089 sec. Processed 1.00 billion rows, 8.00 GB (110.03 million rows/s., 880.22 MB/s.)

SELECT count()

FROM

(

SELECT materialize('123456789012345678901234') AS key -- length(key) = 24

FROM numbers(1000000000)

)

GROUP BY key

Aggregator: Aggregation method: key_string

Elapsed: 9.134 sec. Processed 1.00 billion rows, 8.00 GB (109.49 million rows/s., 875.94 MB/s.)

SELECT count()

FROM

(

SELECT materialize('1234567890123456789012345') AS key -- length(key) = 25

FROM numbers(1000000000)

)

GROUP BY key

Aggregator: Aggregation method: key_string

Elapsed: 12.566 sec. Processed 1.00 billion rows, 8.00 GB (79.58 million rows/s., 636.67 MB/s.)

Key length (bytes) and elapsed time (seconds):

- 16: 8.89

- 17: 9.09

- 24: 9.13

- 25: 12.57

What a GROUP BY statement uses memory for

- Hash tables

They grow with:

The number of unique combinations of the GROUP BY keys

The size of the GROUP BY keys

- States of aggregate functions

Be careful with functions whose state can use an unbounded amount of memory and grow indefinitely:

- groupArray (groupArray(1000)())

- uniqExact (uniq,uniqCombined)

- quantileExact (medianExact) (quantile,quantileTDigest)

- windowFunnel

- groupBitmap

- sequenceCount (sequenceMatch)

- *Map

Why does my GROUP BY eat all the RAM

- Run your query with set send_logs_level='trace'

- Remove all aggregate functions from the query to see how much memory the plain GROUP BY takes.

- Remove aggregate functions from the query one by one to find out which one takes the most memory.

1.1 - GROUP BY tricks

Tricks

Testing dataset

CREATE TABLE sessions

(

`app` LowCardinality(String),

`user_id` String,

`created_at` DateTime,

`platform` LowCardinality(String),

`clicks` UInt32,

`session_id` UUID

)

ENGINE = MergeTree

PARTITION BY toYYYYMM(created_at)

ORDER BY (app, user_id, session_id, created_at)

INSERT INTO sessions WITH

CAST(number % 4, 'Enum8(\'Orange\' = 0, \'Melon\' = 1, \'Red\' = 2, \'Blue\' = 3)') AS app,

concat('UID: ', leftPad(toString(number % 20000000), 8, '0')) AS user_id,

toDateTime('2021-10-01 10:11:12') + (number / 300) AS created_at,

CAST((number + 14) % 3, 'Enum8(\'Bat\' = 0, \'Mice\' = 1, \'Rat\' = 2)') AS platform,

number % 17 AS clicks,

generateUUIDv4() AS session_id

SELECT

app,

user_id,

created_at,

platform,

clicks,

session_id

FROM numbers_mt(1000000000)

0 rows in set. Elapsed: 46.078 sec. Processed 1.00 billion rows, 8.00 GB (21.70 million rows/s., 173.62 MB/s.)

┌─database─┬─table────┬─column─────┬─type───────────────────┬───────rows─┬─compressed_bytes─┬─compressed─┬─uncompressed─┬──────────────ratio─┬─codec─┐

│ default │ sessions │ session_id │ UUID │ 1000000000 │ 16065918103 │ 14.96 GiB │ 14.90 GiB │ 0.9958970223439835 │ │

│ default │ sessions │ user_id │ String │ 1000000000 │ 3056977462 │ 2.85 GiB │ 13.04 GiB │ 4.57968701896828 │ │

│ default │ sessions │ clicks │ UInt32 │ 1000000000 │ 1859359032 │ 1.73 GiB │ 3.73 GiB │ 2.151278979023993 │ │

│ default │ sessions │ created_at │ DateTime │ 1000000000 │ 1332089630 │ 1.24 GiB │ 3.73 GiB │ 3.0028009451586226 │ │

│ default │ sessions │ platform │ LowCardinality(String) │ 1000000000 │ 329702248 │ 314.43 MiB │ 956.63 MiB │ 3.042446801879252 │ │

│ default │ sessions │ app │ LowCardinality(String) │ 1000000000 │ 4825544 │ 4.60 MiB │ 956.63 MiB │ 207.87333386660654 │ │

└──────────┴──────────┴────────────┴────────────────────────┴────────────┴──────────────────┴────────────┴──────────────┴────────────────────┴───────┘

All queries and datasets are unique, so in different situations different hacks could work better or worse.

PreFilter values before GROUP BY

SELECT

user_id,

sum(clicks)

FROM sessions

WHERE created_at > '2021-11-01 00:00:00'

GROUP BY user_id

HAVING (argMax(clicks, created_at) = 16) AND (argMax(platform, created_at) = 'Rat')

FORMAT `Null`

<Debug> MemoryTracker: Peak memory usage (for query): 18.36 GiB.

SELECT

user_id,

sum(clicks)

FROM sessions

WHERE user_id IN (

SELECT user_id

FROM sessions

WHERE (platform = 'Rat') AND (clicks = 16) AND (created_at > '2021-11-01 00:00:00') -- So we will select user_id which could potentially match our HAVING clause in OUTER query.

) AND (created_at > '2021-11-01 00:00:00')

GROUP BY user_id

HAVING (argMax(clicks, created_at) = 16) AND (argMax(platform, created_at) = 'Rat')

FORMAT `Null`

<Debug> MemoryTracker: Peak memory usage (for query): 4.43 GiB.

Use Fixed-width data types instead of String

For example, you have strings whose values follow a special format like this:

'ABX 1412312312313'

You can remove the first 4 characters and convert the rest to UInt64:

toUInt64(substr('ABX 1412312312313', 5))

This packs 17 bytes into 8, so the key becomes more than 2 times smaller.
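The arithmetic can be checked outside ClickHouse. The Python snippet below is only a sketch with a hypothetical helper name; it assumes a fixed 4-character prefix followed by decimal digits.

```python
def pack_id(value: str, prefix_len: int = 4) -> int:
    """Drop the fixed prefix and return the digits as a number that fits UInt64."""
    number = int(value[prefix_len:])
    if not 0 <= number < 2**64:
        raise ValueError("does not fit into UInt64")
    return number


packed = pack_id("ABX 1412312312313")
size_before = len("ABX 1412312312313".encode())  # bytes as a string
size_after = len(packed.to_bytes(8, "little"))   # bytes as a UInt64
```

The range check matters: a value with more than 20 digits would not fit into UInt64, and the trick would not apply.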

SELECT

user_id,

sum(clicks)

FROM sessions

GROUP BY

user_id,

platform

FORMAT `Null`

Aggregator: Aggregation method: serialized

<Debug> MemoryTracker: Peak memory usage (for query): 28.19 GiB.

Elapsed: 7.375 sec. Processed 1.00 billion rows, 27.00 GB (135.60 million rows/s., 3.66 GB/s.)

WITH

CAST(user_id, 'FixedString(14)') AS user_fx,

CAST(platform, 'FixedString(4)') AS platform_fx

SELECT

user_fx,

sum(clicks)

FROM sessions

GROUP BY

user_fx,

platform_fx

FORMAT `Null`

Aggregator: Aggregation method: keys256

MemoryTracker: Peak memory usage (for query): 22.24 GiB.

Elapsed: 6.637 sec. Processed 1.00 billion rows, 27.00 GB (150.67 million rows/s., 4.07 GB/s.)

WITH

CAST(user_id, 'FixedString(14)') AS user_fx,

CAST(platform, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 0)') AS platform_enum

SELECT

user_fx,

sum(clicks)

FROM sessions

GROUP BY

user_fx,

platform_enum

FORMAT `Null`

Aggregator: Aggregation method: keys128

MemoryTracker: Peak memory usage (for query): 14.14 GiB.

Elapsed: 5.335 sec. Processed 1.00 billion rows, 27.00 GB (187.43 million rows/s., 5.06 GB/s.)

WITH

toUInt32OrZero(trim( LEADING '0' FROM substr(user_id,6))) AS user_int,

CAST(platform, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 0)') AS platform_enum

SELECT

user_int,

sum(clicks)

FROM sessions

GROUP BY

user_int,

platform_enum

FORMAT `Null`

Aggregator: Aggregation method: keys64

MemoryTracker: Peak memory usage (for query): 10.14 GiB.

Elapsed: 8.549 sec. Processed 1.00 billion rows, 27.00 GB (116.97 million rows/s., 3.16 GB/s.)

WITH

toUInt32('1' || substr(user_id,6)) AS user_int,

CAST(platform, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 0)') AS platform_enum

SELECT

user_int,

sum(clicks)

FROM sessions

GROUP BY

user_int,

platform_enum

FORMAT `Null`

Aggregator: Aggregation method: keys64

Peak memory usage (for query): 10.14 GiB.

Elapsed: 6.247 sec. Processed 1.00 billion rows, 27.00 GB (160.09 million rows/s., 4.32 GB/s.)

This can be especially useful when you try to do GROUP BY lc_column_1, lc_column_2 and ClickHouse® falls back to the serialized algorithm.

Two LowCardinality Columns in GROUP BY

SELECT

app,

sum(clicks)

FROM sessions

GROUP BY app

FORMAT `Null`

Aggregator: Aggregation method: low_cardinality_key_string

MemoryTracker: Peak memory usage (for query): 43.81 MiB.

Elapsed: 0.545 sec. Processed 1.00 billion rows, 5.00 GB (1.83 billion rows/s., 9.17 GB/s.)

SELECT

app,

platform,

sum(clicks)

FROM sessions

GROUP BY

app,

platform

FORMAT `Null`

Aggregator: Aggregation method: serialized -- Slowest method!

MemoryTracker: Peak memory usage (for query): 222.86 MiB.

Elapsed: 2.923 sec. Processed 1.00 billion rows, 6.00 GB (342.11 million rows/s., 2.05 GB/s.)

SELECT

CAST(app, 'FixedString(6)') AS app_fx,

CAST(platform, 'FixedString(4)') AS platform_fx,

sum(clicks)

FROM sessions

GROUP BY

app_fx,

platform_fx

FORMAT `Null`

Aggregator: Aggregation method: keys128

MemoryTracker: Peak memory usage (for query): 160.23 MiB.

Elapsed: 1.632 sec. Processed 1.00 billion rows, 6.00 GB (612.63 million rows/s., 3.68 GB/s.)

Split your query into multiple smaller queries and execute them one by one

SELECT

user_id,

sum(clicks)

FROM sessions

GROUP BY

user_id,

platform

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 28.19 GiB.

Elapsed: 7.375 sec. Processed 1.00 billion rows, 27.00 GB (135.60 million rows/s., 3.66 GB/s.)

SELECT

user_id,

sum(clicks)

FROM sessions

WHERE (cityHash64(user_id) % 4) = 0

GROUP BY

user_id,

platform

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 8.16 GiB.

Elapsed: 2.910 sec. Processed 1.00 billion rows, 27.00 GB (343.64 million rows/s., 9.28 GB/s.)
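The reason this works: the hash of the key splits users into disjoint slices, so each smaller query builds a hash table with roughly 1/N of the keys, and the results can simply be concatenated. A Python sketch of the idea (function names are hypothetical; CRC32 stands in for cityHash64):

```python
import zlib
from collections import defaultdict


def group_sum(rows):
    """Plain GROUP BY user_id with sum(clicks)."""
    table = defaultdict(int)
    for user_id, clicks in rows:
        table[user_id] += clicks
    return dict(table)


def group_sum_in_slices(rows, slices=4):
    """Run the same aggregation once per slice: hash(user_id) % slices == i."""
    result, peak_keys = {}, 0
    for i in range(slices):
        part = group_sum(
            r for r in rows if zlib.crc32(r[0].encode()) % slices == i
        )
        peak_keys = max(peak_keys, len(part))  # largest hash table built
        result.update(part)                    # slices never share a user_id
    return result, peak_keys
```

The price is that the table is scanned once per slice, as in the example above where every slice still processes 1.00 billion rows.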

Shard your data by one of the common high-cardinality GROUP BY keys

Each shard will then hold 1/N of all unique key combinations, which results in smaller hash tables.
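A small Python simulation shows the difference. It is an illustration under stated assumptions: the function name is hypothetical, CRC32 stands in for cityHash64(user_id), and a seeded random generator stands in for rand().

```python
import random
import zlib


def unique_keys_per_shard(user_ids, shards, by_key):
    """Return how many distinct user_ids each shard must keep in its hash table."""
    rng = random.Random(0)  # fixed seed so the sketch is reproducible
    seen = [set() for _ in range(shards)]
    for user_id in user_ids:
        if by_key:
            shard = zlib.crc32(user_id.encode()) % shards  # sharding by key
        else:
            shard = rng.randrange(shards)                  # random sharding
        seen[shard].add(user_id)
    return [len(s) for s in seen]


ids = [f"user{i % 1000}" for i in range(30000)]
random_sharding = unique_keys_per_shard(ids, 3, by_key=False)
key_sharding = unique_keys_per_shard(ids, 3, by_key=True)
```

With sharding by key, the per-shard counts add up to exactly the number of distinct users; with random sharding almost every shard sees almost every user.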

Let's create 2 distributed tables with different sharding keys: rand() and cityHash64(user_id)

CREATE TABLE sessions_distributed AS sessions

ENGINE = Distributed('distr-groupby', default, sessions, rand());

INSERT INTO sessions_distributed WITH

CAST(number % 4, 'Enum8(\'Orange\' = 0, \'Melon\' = 1, \'Red\' = 2, \'Blue\' = 3)') AS app,

concat('UID: ', leftPad(toString(number % 20000000), 8, '0')) AS user_id,

toDateTime('2021-10-01 10:11:12') + (number / 300) AS created_at,

CAST((number + 14) % 3, 'Enum8(\'Bat\' = 0, \'Mice\' = 1, \'Rat\' = 2)') AS platform,

number % 17 AS clicks,

generateUUIDv4() AS session_id

SELECT

app,

user_id,

created_at,

platform,

clicks,

session_id

FROM numbers_mt(1000000000);

CREATE TABLE sessions_2 ON CLUSTER 'distr-groupby'

(

`app` LowCardinality(String),

`user_id` String,

`created_at` DateTime,

`platform` LowCardinality(String),

`clicks` UInt32,

`session_id` UUID

)

ENGINE = MergeTree

PARTITION BY toYYYYMM(created_at)

ORDER BY (app, user_id, session_id, created_at);

CREATE TABLE sessions_distributed_2 AS sessions

ENGINE = Distributed('distr-groupby', default, sessions_2, cityHash64(user_id));

INSERT INTO sessions_distributed_2 WITH

CAST(number % 4, 'Enum8(\'Orange\' = 0, \'Melon\' = 1, \'Red\' = 2, \'Blue\' = 3)') AS app,

concat('UID: ', leftPad(toString(number % 20000000), 8, '0')) AS user_id,

toDateTime('2021-10-01 10:11:12') + (number / 300) AS created_at,

CAST((number + 14) % 3, 'Enum8(\'Bat\' = 0, \'Mice\' = 1, \'Rat\' = 2)') AS platform,

number % 17 AS clicks,

generateUUIDv4() AS session_id

SELECT

app,

user_id,

created_at,

platform,

clicks,

session_id

FROM numbers_mt(1000000000);

SELECT

app,

platform,

sum(clicks)

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

argMax(clicks, created_at) AS clicks

FROM sessions_distributed

GROUP BY user_id

)

GROUP BY

app,

platform;

[chi-distr-groupby-distr-groupby-0-0-0] MemoryTracker: Current memory usage (for query): 12.02 GiB.

[chi-distr-groupby-distr-groupby-1-0-0] MemoryTracker: Current memory usage (for query): 12.05 GiB.

[chi-distr-groupby-distr-groupby-2-0-0] MemoryTracker: Current memory usage (for query): 12.05 GiB.

MemoryTracker: Peak memory usage (for query): 12.20 GiB.

12 rows in set. Elapsed: 28.345 sec. Processed 1.00 billion rows, 32.00 GB (35.28 million rows/s., 1.13 GB/s.)

SELECT

app,

platform,

sum(clicks)

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

argMax(clicks, created_at) AS clicks

FROM sessions_distributed_2

GROUP BY user_id

)

GROUP BY

app,

platform;

[chi-distr-groupby-distr-groupby-0-0-0] MemoryTracker: Current memory usage (for query): 5.05 GiB.

[chi-distr-groupby-distr-groupby-1-0-0] MemoryTracker: Current memory usage (for query): 5.05 GiB.

[chi-distr-groupby-distr-groupby-2-0-0] MemoryTracker: Current memory usage (for query): 5.05 GiB.

MemoryTracker: Peak memory usage (for query): 5.61 GiB.

12 rows in set. Elapsed: 11.952 sec. Processed 1.00 billion rows, 32.00 GB (83.66 million rows/s., 2.68 GB/s.)

SELECT

app,

platform,

sum(clicks)

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

argMax(clicks, created_at) AS clicks

FROM sessions_distributed_2

GROUP BY user_id

)

GROUP BY

app,

platform

SETTINGS optimize_distributed_group_by_sharding_key = 1

[chi-distr-groupby-distr-groupby-0-0-0] MemoryTracker: Current memory usage (for query): 5.05 GiB.

[chi-distr-groupby-distr-groupby-1-0-0] MemoryTracker: Current memory usage (for query): 5.05 GiB.

[chi-distr-groupby-distr-groupby-2-0-0] MemoryTracker: Current memory usage (for query): 5.05 GiB.

MemoryTracker: Peak memory usage (for query): 5.61 GiB.

12 rows in set. Elapsed: 11.916 sec. Processed 1.00 billion rows, 32.00 GB (83.92 million rows/s., 2.69 GB/s.)

SELECT

app,

platform,

sum(clicks)

FROM cluster('distr-groupby', view(

SELECT

app,

platform,

sum(clicks) as clicks

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

argMax(clicks, created_at) AS clicks

FROM sessions_2

GROUP BY user_id

)

GROUP BY

app,

platform

))

GROUP BY

app,

platform;

[chi-distr-groupby-distr-groupby-0-0-0] MemoryTracker: Current memory usage (for query): 5.05 GiB.

[chi-distr-groupby-distr-groupby-1-0-0] MemoryTracker: Current memory usage (for query): 5.05 GiB.

[chi-distr-groupby-distr-groupby-2-0-0] MemoryTracker: Current memory usage (for query): 5.05 GiB.

MemoryTracker: Peak memory usage (for query): 5.55 GiB.

12 rows in set. Elapsed: 9.491 sec. Processed 1.00 billion rows, 32.00 GB (105.36 million rows/s., 3.37 GB/s.)

Query with bigger state:

SELECT

app,

platform,

sum(last_click) as sum,

max(max_clicks) as max,

min(min_clicks) as min,

max(max_time) as max_time,

min(min_time) as min_time

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

argMax(clicks, created_at) AS last_click,

max(clicks) AS max_clicks,

min(clicks) AS min_clicks,

max(created_at) AS max_time,

min(created_at) AS min_time

FROM sessions_distributed

GROUP BY user_id

)

GROUP BY

app,

platform;

MemoryTracker: Peak memory usage (for query): 19.95 GiB.

12 rows in set. Elapsed: 34.339 sec. Processed 1.00 billion rows, 32.00 GB (29.12 million rows/s., 932.03 MB/s.)

SELECT

app,

platform,

sum(last_click) as sum,

max(max_clicks) as max,

min(min_clicks) as min,

max(max_time) as max_time,

min(min_time) as min_time

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

argMax(clicks, created_at) AS last_click,

max(clicks) AS max_clicks,

min(clicks) AS min_clicks,

max(created_at) AS max_time,

min(created_at) AS min_time

FROM sessions_distributed_2

GROUP BY user_id

)

GROUP BY

app,

platform;

MemoryTracker: Peak memory usage (for query): 10.09 GiB.

12 rows in set. Elapsed: 13.220 sec. Processed 1.00 billion rows, 32.00 GB (75.64 million rows/s., 2.42 GB/s.)

SELECT

app,

platform,

sum(last_click) AS sum,

max(max_clicks) AS max,

min(min_clicks) AS min,

max(max_time) AS max_time,

min(min_time) AS min_time

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

argMax(clicks, created_at) AS last_click,

max(clicks) AS max_clicks,

min(clicks) AS min_clicks,

max(created_at) AS max_time,

min(created_at) AS min_time

FROM sessions_distributed_2

GROUP BY user_id

)

GROUP BY

app,

platform

SETTINGS optimize_distributed_group_by_sharding_key = 1;

MemoryTracker: Peak memory usage (for query): 10.09 GiB.

12 rows in set. Elapsed: 13.361 sec. Processed 1.00 billion rows, 32.00 GB (74.85 million rows/s., 2.40 GB/s.)

SELECT

app,

platform,

sum(last_click) AS sum,

max(max_clicks) AS max,

min(min_clicks) AS min,

max(max_time) AS max_time,

min(min_time) AS min_time

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

argMax(clicks, created_at) AS last_click,

max(clicks) AS max_clicks,

min(clicks) AS min_clicks,

max(created_at) AS max_time,

min(created_at) AS min_time

FROM sessions_distributed_2

GROUP BY user_id

)

GROUP BY

app,

platform

SETTINGS distributed_group_by_no_merge=2;

MemoryTracker: Peak memory usage (for query): 10.02 GiB.

12 rows in set. Elapsed: 9.789 sec. Processed 1.00 billion rows, 32.00 GB (102.15 million rows/s., 3.27 GB/s.)

SELECT

app,

platform,

sum(sum),

max(max),

min(min),

max(max_time) AS max_time,

min(min_time) AS min_time

FROM cluster('distr-groupby', view(

SELECT

app,

platform,

sum(last_click) AS sum,

max(max_clicks) AS max,

min(min_clicks) AS min,

max(max_time) AS max_time,

min(min_time) AS min_time

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

argMax(clicks, created_at) AS last_click,

max(clicks) AS max_clicks,

min(clicks) AS min_clicks,

max(created_at) AS max_time,

min(created_at) AS min_time

FROM sessions_2

GROUP BY user_id

)

GROUP BY

app,

platform

))

GROUP BY

app,

platform;

MemoryTracker: Peak memory usage (for query): 10.09 GiB.

12 rows in set. Elapsed: 9.525 sec. Processed 1.00 billion rows, 32.00 GB (104.98 million rows/s., 3.36 GB/s.)

SELECT

app,

platform,

sum(sessions)

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

uniq(session_id) as sessions

FROM sessions_distributed_2

GROUP BY user_id

)

GROUP BY

app,

platform

MemoryTracker: Peak memory usage (for query): 14.57 GiB.

12 rows in set. Elapsed: 37.730 sec. Processed 1.00 billion rows, 44.01 GB (26.50 million rows/s., 1.17 GB/s.)

SELECT

app,

platform,

sum(sessions)

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

uniq(session_id) as sessions

FROM sessions_distributed_2

GROUP BY user_id

)

GROUP BY

app,

platform

SETTINGS optimize_distributed_group_by_sharding_key = 1;

MemoryTracker: Peak memory usage (for query): 14.56 GiB.

12 rows in set. Elapsed: 37.792 sec. Processed 1.00 billion rows, 44.01 GB (26.46 million rows/s., 1.16 GB/s.)

SELECT

app,

platform,

sum(sessions)

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

uniq(session_id) as sessions

FROM sessions_distributed_2

GROUP BY user_id

)

GROUP BY

app,

platform

SETTINGS distributed_group_by_no_merge = 2;

MemoryTracker: Peak memory usage (for query): 14.54 GiB.

12 rows in set. Elapsed: 17.762 sec. Processed 1.00 billion rows, 44.01 GB (56.30 million rows/s., 2.48 GB/s.)

SELECT

app,

platform,

sum(sessions)

FROM cluster('distr-groupby', view(

SELECT

app,

platform,

sum(sessions) as sessions

FROM

(

SELECT

argMax(app, created_at) AS app,

argMax(platform, created_at) AS platform,

user_id,

uniq(session_id) as sessions

FROM sessions_2

GROUP BY user_id

)

GROUP BY

app,

platform))

GROUP BY

app,

platform

MemoryTracker: Peak memory usage (for query): 14.55 GiB.

12 rows in set. Elapsed: 17.574 sec. Processed 1.00 billion rows, 44.01 GB (56.90 million rows/s., 2.50 GB/s.)

Reduce the number of threads

Because each thread uses an independent hash table, lowering the number of threads also reduces the number of hash tables and lowers memory usage, at the cost of slower query execution.
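The relation between threads and memory can be approximated by counting hash table entries. The Python sketch below is a simplification with a hypothetical function name: rows are dealt to threads round-robin, and memory is approximated by the total number of entries across all per-thread tables.

```python
def total_hash_table_entries(keys, threads):
    """Each thread keeps its own set of keys; return the total entry count."""
    tables = [set() for _ in range(threads)]
    for i, key in enumerate(keys):
        tables[i % threads].add(key)
    return sum(len(t) for t in tables)


# 997 distinct keys, each repeated about 100 times.
keys = [i % 997 for i in range(100_000)]
entries_1_thread = total_hash_table_entries(keys, 1)
entries_2_threads = total_hash_table_entries(keys, 2)
entries_16_threads = total_hash_table_entries(keys, 16)
```

When every key shows up in every thread, the total grows linearly with the thread count, which is the worst case for a high-cardinality GROUP BY.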

SELECT

user_id,

sum(clicks)

FROM sessions

GROUP BY

user_id,

platform

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 28.19 GiB.

Elapsed: 7.375 sec. Processed 1.00 billion rows, 27.00 GB (135.60 million rows/s., 3.66 GB/s.)

SET max_threads = 2;

SELECT

user_id,

sum(clicks)

FROM sessions

GROUP BY

user_id,

platform

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 13.26 GiB.

Elapsed: 62.014 sec. Processed 1.00 billion rows, 27.00 GB (16.13 million rows/s., 435.41 MB/s.)

UNION ALL

SELECT

user_id,

sum(clicks)

FROM sessions

GROUP BY

app,

user_id

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 24.19 GiB.

Elapsed: 5.043 sec. Processed 1.00 billion rows, 27.00 GB (198.29 million rows/s., 5.35 GB/s.)

SELECT

user_id,

sum(clicks)

FROM sessions WHERE app = 'Orange'

GROUP BY

user_id

UNION ALL

SELECT

user_id,

sum(clicks)

FROM sessions WHERE app = 'Red'

GROUP BY

user_id

UNION ALL

SELECT

user_id,

sum(clicks)

FROM sessions WHERE app = 'Melon'

GROUP BY

user_id

UNION ALL

SELECT

user_id,

sum(clicks)

FROM sessions WHERE app = 'Blue'

GROUP BY

user_id

FORMAT Null

MemoryTracker: Peak memory usage (for query): 7.99 GiB.

Elapsed: 2.852 sec. Processed 1.00 billion rows, 27.01 GB (350.74 million rows/s., 9.47 GB/s.)

aggregation_in_order

SELECT

user_id,

sum(clicks)

FROM sessions

WHERE app = 'Orange'

GROUP BY user_id

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 969.33 MiB.

Elapsed: 2.494 sec. Processed 250.09 million rows, 6.75 GB (100.27 million rows/s., 2.71 GB/s.)

SET optimize_aggregation_in_order = 1;

SELECT

user_id,

sum(clicks)

FROM sessions

WHERE app = 'Orange'

GROUP BY

app,

user_id

FORMAT `Null`

AggregatingInOrderTransform: Aggregating in order

MemoryTracker: Peak memory usage (for query): 169.24 MiB.

Elapsed: 4.925 sec. Processed 250.09 million rows, 6.75 GB (50.78 million rows/s., 1.37 GB/s.)

Reduce dimensions from GROUP BY with functions like sumMap, *Resample

One

SELECT

user_id,

toDate(created_at) AS day,

sum(clicks)

FROM sessions

WHERE (created_at >= toDate('2021-10-01')) AND (created_at < toDate('2021-11-01')) AND (app IN ('Orange', 'Red', 'Blue'))

GROUP BY

user_id,

day

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 50.74 GiB.

Elapsed: 22.671 sec. Processed 594.39 million rows, 18.46 GB (26.22 million rows/s., 814.41 MB/s.)

SELECT

user_id,

(toDate('2021-10-01') + date_diff) - 1 AS day,

clicks

FROM

(

SELECT

user_id,

sumResample(0, 31, 1)(clicks, toDate(created_at) - toDate('2021-10-01')) AS clicks_arr

FROM sessions

WHERE (created_at >= toDate('2021-10-01')) AND (created_at < toDate('2021-11-01')) AND (app IN ('Orange', 'Red', 'Blue'))

GROUP BY user_id

)

ARRAY JOIN

clicks_arr AS clicks,

arrayEnumerate(clicks_arr) AS date_diff

FORMAT `Null`

Peak memory usage (for query): 8.24 GiB.

Elapsed: 5.191 sec. Processed 594.39 million rows, 18.46 GB (114.50 million rows/s., 3.56 GB/s.)
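What the second query changes is the shape of the hash table: one entry per user_id holding a fixed array of 31 daily sums, instead of one entry per (user_id, day) pair. A Python sketch of the same idea (names are hypothetical; the date range mirrors the query above):

```python
from collections import defaultdict
from datetime import date, timedelta

START = date(2021, 10, 1)
DAYS = 31


def clicks_per_user_day(rows):
    """rows: (user_id, day, clicks). One hash-table entry per user, 31 slots each."""
    table = defaultdict(lambda: [0] * DAYS)
    for user_id, day, clicks in rows:
        table[user_id][(day - START).days] += clicks
    return table


def unfold(table):
    """Counterpart of the ARRAY JOIN step: arrays back to (user, day, clicks) rows."""
    for user_id, arr in table.items():
        for offset, clicks in enumerate(arr):
            yield user_id, START + timedelta(days=offset), clicks
```

Note that unfolding emits a row for every day, including days with zero clicks, which the plain GROUP BY would not return.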

Multiple

SELECT

user_id,

platform,

toDate(created_at) AS day,

sum(clicks)

FROM sessions

WHERE (created_at >= toDate('2021-10-01')) AND (created_at < toDate('2021-11-01')) AND (app IN ('Orange')) AND user_id ='UID: 08525196'

GROUP BY

user_id,

platform,

day

ORDER BY user_id,

day,

platform

FORMAT `Null`

Peak memory usage (for query): 29.50 GiB.

Elapsed: 8.181 sec. Processed 198.14 million rows, 6.34 GB (24.22 million rows/s., 775.14 MB/s.)

WITH arrayJoin(arrayZip(clicks_arr_lvl_2, range(3))) AS clicks_res

SELECT

user_id,

CAST(clicks_res.2 + 1, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 3)') AS platform,

(toDate('2021-10-01') + date_diff) - 1 AS day,

clicks_res.1 AS clicks

FROM

(

SELECT

user_id,

sumResampleResample(1, 4, 1, 0, 31, 1)(clicks, CAST(platform, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 3)'), toDate(created_at) - toDate('2021-10-01')) AS clicks_arr

FROM sessions

WHERE (created_at >= toDate('2021-10-01')) AND (created_at < toDate('2021-11-01')) AND (app IN ('Orange'))

GROUP BY user_id

)

ARRAY JOIN

clicks_arr AS clicks_arr_lvl_2,

range(31) AS date_diff

FORMAT `Null`

Peak memory usage (for query): 9.92 GiB.

Elapsed: 4.170 sec. Processed 198.14 million rows, 6.34 GB (47.52 million rows/s., 1.52 GB/s.)

WITH arrayJoin(arrayZip(clicks_arr_lvl_2, range(3))) AS clicks_res

SELECT

user_id,

CAST(clicks_res.2 + 1, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 3)') AS platform,

(toDate('2021-10-01') + date_diff) - 1 AS day,

clicks_res.1 AS clicks

FROM

(

SELECT

user_id,

sumResampleResample(1, 4, 1, 0, 31, 1)(clicks, CAST(platform, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 3)'), toDate(created_at) - toDate('2021-10-01')) AS clicks_arr

FROM sessions

WHERE (created_at >= toDate('2021-10-01')) AND (created_at < toDate('2021-11-01')) AND (app IN ('Orange'))

GROUP BY user_id

)

ARRAY JOIN

clicks_arr AS clicks_arr_lvl_2,

range(31) AS date_diff

WHERE clicks > 0

FORMAT `Null`

Peak memory usage (for query): 10.14 GiB.

Elapsed: 9.533 sec. Processed 198.14 million rows, 6.34 GB (20.78 million rows/s., 665.20 MB/s.)

SELECT

user_id,

CAST(plat + 1, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 3)') AS platform,

(toDate('2021-10-01') + date_diff) - 1 AS day,

clicks

FROM

(

WITH

(SELECT flatten(arrayMap(x -> range(3) AS platforms, range(31) as days))) AS platform_arr,

(SELECT flatten(arrayMap(x -> [x, x, x], range(31) as days))) AS days_arr

SELECT

user_id,

flatten(sumResampleResample(1, 4, 1, 0, 31, 1)(clicks, CAST(platform, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 3)'), toDate(created_at) - toDate('2021-10-01'))) AS clicks_arr,

platform_arr,

days_arr

FROM sessions

WHERE (created_at >= toDate('2021-10-01')) AND (created_at < toDate('2021-11-01')) AND (app IN ('Orange'))

GROUP BY user_id

)

ARRAY JOIN

clicks_arr AS clicks,

platform_arr AS plat,

days_arr AS date_diff

FORMAT `Null`

Peak memory usage (for query): 9.95 GiB.

Elapsed: 3.095 sec. Processed 198.14 million rows, 6.34 GB (64.02 million rows/s., 2.05 GB/s.)

SELECT

user_id,

CAST(plat + 1, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 3)') AS platform,

(toDate('2021-10-01') + date_diff) - 1 AS day,

clicks

FROM

(

WITH

(SELECT flatten(arrayMap(x -> range(3) AS platforms, range(31) as days))) AS platform_arr,

(SELECT flatten(arrayMap(x -> [x, x, x], range(31) as days))) AS days_arr

SELECT

user_id,

sumResampleResample(1, 4, 1, 0, 31, 1)(clicks, CAST(platform, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 3)'), toDate(created_at) - toDate('2021-10-01')) AS clicks_arr,

arrayFilter(x -> ((x.1) > 0), arrayZip(flatten(clicks_arr), platform_arr, days_arr)) AS result

FROM sessions

WHERE (created_at >= toDate('2021-10-01')) AND (created_at < toDate('2021-11-01')) AND (app IN ('Orange'))

GROUP BY user_id

)

ARRAY JOIN

result.1 AS clicks,

result.2 AS plat,

result.3 AS date_diff

FORMAT `Null`

Peak memory usage (for query): 9.93 GiB.

Elapsed: 4.717 sec. Processed 198.14 million rows, 6.34 GB (42.00 million rows/s., 1.34 GB/s.)

SELECT

user_id,

CAST(range % 3, 'Enum8(\'Rat\' = 0, \'Mice\' = 1, \'Bat\' = 2)') AS platform,

toDate('2021-10-01') + intDiv(range, 3) AS day,

clicks

FROM

(

WITH (

SELECT range(93)

) AS range_arr

SELECT

user_id,

sumResample(0, 93, 1)(clicks, ((toDate(created_at) - toDate('2021-10-01')) * 3) + toUInt8(CAST(platform, 'Enum8(\'Rat\' = 0, \'Mice\' = 1, \'Bat\' = 2)'))) AS clicks_arr,

range_arr

FROM sessions

WHERE (created_at >= toDate('2021-10-01')) AND (created_at < toDate('2021-11-01')) AND (app IN ('Orange'))

GROUP BY user_id

)

ARRAY JOIN

clicks_arr AS clicks,

range_arr AS range

FORMAT `Null`

Peak memory usage (for query): 8.24 GiB.

Elapsed: 4.838 sec. Processed 198.14 million rows, 6.36 GB (40.95 million rows/s., 1.31 GB/s.)

SELECT

user_id,

sumResampleResample(1, 4, 1, 0, 31, 1)(clicks, CAST(platform, 'Enum8(\'Rat\' = 1, \'Mice\' = 2, \'Bat\' = 3)'), toDate(created_at) - toDate('2021-10-01')) AS clicks_arr

FROM sessions

WHERE (created_at >= toDate('2021-10-01')) AND (created_at < toDate('2021-11-01')) AND (app IN ('Orange'))

GROUP BY user_id

FORMAT `Null`

Peak memory usage (for query): 5.19 GiB.

0 rows in set. Elapsed: 1.160 sec. Processed 198.14 million rows, 6.34 GB (170.87 million rows/s., 5.47 GB/s.)

ARRAY JOIN can be expensive

https://kb.altinity.com/altinity-kb-functions/array-like-memory-usage/

sumMap, *Resample

https://kb.altinity.com/altinity-kb-functions/resample-vs-if-vs-map-vs-subquery/

Play with two-level

Disable:

SET group_by_two_level_threshold = 0, group_by_two_level_threshold_bytes = 0;

Starting from 22.4, ClickHouse can predict when it makes sense to initialize aggregation as two-level from the start instead of rehashing on the fly, which can improve query time. https://github.com/ClickHouse/ClickHouse/pull/33439

GROUP BY in external memory

Slow!

Use hash function for GROUP BY keys

GROUP BY cityHash64('xxxx')

Can lead to incorrect results, because a hash function is not a one-to-one mapping: distinct keys can collide and be merged into one group.
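The collision risk can be illustrated outside ClickHouse. The sketch below is only an illustration: `tiny_hash` is a hypothetical, deliberately weak 8-bit hash chosen so that a collision is easy to see. With a 64-bit hash such as cityHash64, collisions are far less likely, but the effect on the result is the same when one happens.

```python
from collections import defaultdict

def tiny_hash(key: str) -> int:
    """Deliberately weak 8-bit hash (hypothetical), so collisions are easy to see."""
    return sum(key.encode()) % 256

def group_count(rows, key_fn):
    """Count rows per group, similar to SELECT key_fn(k), count() ... GROUP BY key_fn(k)."""
    counts = defaultdict(int)
    for row in rows:
        counts[key_fn(row)] += 1
    return dict(counts)

rows = ["ab", "ba", "ab", "cd"]          # "ab" and "ba" are different keys

exact = group_count(rows, lambda k: k)   # GROUP BY key
hashed = group_count(rows, tiny_hash)    # GROUP BY hash(key)

print(len(exact), len(hashed))           # 3 groups vs 2 groups
```

Here "ab" and "ba" hash to the same value, so grouping by the hash silently merges them into a single group with a count of 3.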

Performance bugs

https://github.com/ClickHouse/ClickHouse/issues/15005

https://github.com/ClickHouse/ClickHouse/issues/29131

https://github.com/ClickHouse/ClickHouse/issues/31120

https://github.com/ClickHouse/ClickHouse/issues/35096 Fixed in 22.7

2 - Adjustable table partitioning

In this example the partition key is calculated by a MATERIALIZED column expression, toDate(toStartOfInterval(ts, toIntervalT(...))), but the partition id can also be generated on the application side and inserted into ClickHouse® as is.

CREATE TABLE tbl

(

`ts` DateTime,

`key` UInt32,

`partition_key` Date MATERIALIZED toDate(toStartOfInterval(ts, toIntervalYear(1)))

)

ENGINE = MergeTree

PARTITION BY (partition_key, ignore(ts))

ORDER BY key;

SET send_logs_level = 'trace';

INSERT INTO tbl SELECT toDateTime(toDate('2020-01-01') + number) as ts, number as key FROM numbers(300);

Renaming temporary part tmp_insert_20200101-0_1_1_0 to 20200101-0_1_1_0

INSERT INTO tbl SELECT toDateTime(toDate('2021-01-01') + number) as ts, number as key FROM numbers(300);

Renaming temporary part tmp_insert_20210101-0_2_2_0 to 20210101-0_2_2_0

ALTER TABLE tbl

MODIFY COLUMN `partition_key` Date MATERIALIZED toDate(toStartOfInterval(ts, toIntervalMonth(1)));

INSERT INTO tbl SELECT toDateTime(toDate('2022-01-01') + number) as ts, number as key FROM numbers(300);

Renaming temporary part tmp_insert_20220101-0_3_3_0 to 20220101-0_3_3_0

Renaming temporary part tmp_insert_20220201-0_4_4_0 to 20220201-0_4_4_0

Renaming temporary part tmp_insert_20220301-0_5_5_0 to 20220301-0_5_5_0

Renaming temporary part tmp_insert_20220401-0_6_6_0 to 20220401-0_6_6_0

Renaming temporary part tmp_insert_20220501-0_7_7_0 to 20220501-0_7_7_0

Renaming temporary part tmp_insert_20220601-0_8_8_0 to 20220601-0_8_8_0

Renaming temporary part tmp_insert_20220701-0_9_9_0 to 20220701-0_9_9_0

Renaming temporary part tmp_insert_20220801-0_10_10_0 to 20220801-0_10_10_0

Renaming temporary part tmp_insert_20220901-0_11_11_0 to 20220901-0_11_11_0

Renaming temporary part tmp_insert_20221001-0_12_12_0 to 20221001-0_12_12_0

ALTER TABLE tbl

MODIFY COLUMN `partition_key` Date MATERIALIZED toDate(toStartOfInterval(ts, toIntervalDay(1)));

INSERT INTO tbl SELECT toDateTime(toDate('2023-01-01') + number) as ts, number as key FROM numbers(5);

Renaming temporary part tmp_insert_20230101-0_13_13_0 to 20230101-0_13_13_0

Renaming temporary part tmp_insert_20230102-0_14_14_0 to 20230102-0_14_14_0

Renaming temporary part tmp_insert_20230103-0_15_15_0 to 20230103-0_15_15_0

Renaming temporary part tmp_insert_20230104-0_16_16_0 to 20230104-0_16_16_0

Renaming temporary part tmp_insert_20230105-0_17_17_0 to 20230105-0_17_17_0

SELECT _partition_id, min(ts), max(ts), count() FROM tbl GROUP BY _partition_id ORDER BY _partition_id;

┌─_partition_id─┬─────────────min(ts)─┬─────────────max(ts)─┬─count()─┐

│ 20200101-0 │ 2020-01-01 00:00:00 │ 2020-10-26 00:00:00 │ 300 │

│ 20210101-0 │ 2021-01-01 00:00:00 │ 2021-10-27 00:00:00 │ 300 │

│ 20220101-0 │ 2022-01-01 00:00:00 │ 2022-01-31 00:00:00 │ 31 │

│ 20220201-0 │ 2022-02-01 00:00:00 │ 2022-02-28 00:00:00 │ 28 │

│ 20220301-0 │ 2022-03-01 00:00:00 │ 2022-03-31 00:00:00 │ 31 │

│ 20220401-0 │ 2022-04-01 00:00:00 │ 2022-04-30 00:00:00 │ 30 │

│ 20220501-0 │ 2022-05-01 00:00:00 │ 2022-05-31 00:00:00 │ 31 │

│ 20220601-0 │ 2022-06-01 00:00:00 │ 2022-06-30 00:00:00 │ 30 │

│ 20220701-0 │ 2022-07-01 00:00:00 │ 2022-07-31 00:00:00 │ 31 │

│ 20220801-0 │ 2022-08-01 00:00:00 │ 2022-08-31 00:00:00 │ 31 │

│ 20220901-0 │ 2022-09-01 00:00:00 │ 2022-09-30 00:00:00 │ 30 │

│ 20221001-0 │ 2022-10-01 00:00:00 │ 2022-10-27 00:00:00 │ 27 │

│ 20230101-0 │ 2023-01-01 00:00:00 │ 2023-01-01 00:00:00 │ 1 │

│ 20230102-0 │ 2023-01-02 00:00:00 │ 2023-01-02 00:00:00 │ 1 │

│ 20230103-0 │ 2023-01-03 00:00:00 │ 2023-01-03 00:00:00 │ 1 │

│ 20230104-0 │ 2023-01-04 00:00:00 │ 2023-01-04 00:00:00 │ 1 │

│ 20230105-0 │ 2023-01-05 00:00:00 │ 2023-01-05 00:00:00 │ 1 │

└───────────────┴─────────────────────┴─────────────────────┴─────────┘

SELECT count() FROM tbl WHERE ts > '2023-01-04';

Key condition: unknown

MinMax index condition: (column 0 in [1672758001, +Inf))

Selected 1/17 parts by partition key, 1 parts by primary key, 1/1 marks by primary key, 1 marks to read from 1 ranges

Spreading mark ranges among streams (default reading)

Reading 1 ranges in order from part 20230105-0_17_17_0, approx. 1 rows starting from 0
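If the partition key is generated on the application side instead of by the MATERIALIZED expression, the application has to reproduce the same rounding. A minimal sketch, assuming the timestamp is already in the same time zone the server would use; the function name `partition_key` and the unit strings are hypothetical:

```python
from datetime import date, datetime

def partition_key(ts: datetime, unit: str) -> date:
    """Start of the year / month / day that contains ts, as a date."""
    if unit == "year":
        return date(ts.year, 1, 1)
    if unit == "month":
        return date(ts.year, ts.month, 1)
    if unit == "day":
        return ts.date()
    raise ValueError(f"unsupported unit: {unit}")

ts = datetime(2022, 3, 15, 12, 30)
print(partition_key(ts, "year"))    # 2022-01-01
print(partition_key(ts, "month"))   # 2022-03-01
print(partition_key(ts, "day"))     # 2022-03-15
```

Switching the unit over time gives the same effect as the ALTER TABLE ... MODIFY COLUMN statements above: old rows keep their coarse partitions, new rows get finer ones.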

3 - DateTime64

Subtract fractional seconds

WITH toDateTime64('2021-09-07 13:41:50.926', 3) AS time

SELECT

time - 1,

time - 0.1 AS no_affect,

time - toDecimal64(0.1, 3) AS uncorrect_result,

time - toIntervalMillisecond(100) AS correct_result -- from 22.4

Query id: 696722bd-3c22-4270-babe-c6b124fee97f

┌──────────minus(time, 1)─┬───────────────no_affect─┬────────uncorrect_result─┬──────────correct_result─┐

│ 2021-09-07 13:41:49.926 │ 2021-09-07 13:41:50.926 │ 1970-01-01 00:00:00.000 │ 2021-09-07 13:41:50.826 │

└─────────────────────────┴─────────────────────────┴─────────────────────────┴─────────────────────────┘

WITH

toDateTime64('2021-03-03 09:30:00.100', 3) AS time,

fromUnixTimestamp64Milli(toInt64(toUnixTimestamp64Milli(time) + (1.25 * 1000))) AS first,

toDateTime64(toDecimal64(time, 3) + toDecimal64('1.25', 3), 3) AS second,

reinterpret(reinterpret(time, 'Decimal64(3)') + toDecimal64('1.25', 3), 'DateTime64(3)') AS third,

time + toIntervalMillisecond(1250) AS fourth, -- from 22.4

addMilliseconds(time, 1250) AS fifth -- from 22.4

SELECT

first,

second,

third,

fourth,

fifth

Query id: 176cd2e7-68bf-4e26-a492-63e0b5a87cc5

┌───────────────────first─┬──────────────────second─┬───────────────────third─┬──────────────────fourth─┬───────────────────fifth─┐

│ 2021-03-03 09:30:01.350 │ 2021-03-03 09:30:01.350 │ 2021-03-03 09:30:01.350 │ 2021-03-03 09:30:01.350 │ 2021-03-03 09:30:01.350 │

└─────────────────────────┴─────────────────────────┴─────────────────────────┴─────────────────────────┴─────────────────────────┘
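The `first` and `third` variants work because a DateTime64(3) value is stored as an Int64 number of millisecond ticks since the Unix epoch, so adding 1.25 seconds is the same as adding 1250 ticks. The sketch below mirrors that arithmetic in Python; the helper names are hypothetical and UTC is assumed.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
TICK = timedelta(milliseconds=1)  # DateTime64(3): one tick = 1 ms

def to_ticks(dt: datetime) -> int:
    """Whole ticks since the epoch (integer arithmetic, no float rounding)."""
    return (dt - EPOCH) // TICK

def from_ticks(ticks: int) -> datetime:
    """Inverse of to_ticks."""
    return EPOCH + ticks * TICK

t0 = datetime(2021, 3, 3, 9, 30, 0, 100_000, tzinfo=timezone.utc)
shifted = from_ticks(to_ticks(t0) + 1250)  # add 1.25 s as 1250 ticks
print(shifted.isoformat(timespec="milliseconds"))
```

Working on integer ticks avoids the Float64 and Decimal conversions entirely.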

SET max_threads=1;

Starting from 22.4

WITH

materialize(toDateTime64('2021-03-03 09:30:00.100', 3)) AS time,

time + toIntervalMillisecond(1250) AS fourth

SELECT count()

FROM numbers(100000000)

WHERE NOT ignore(fourth)

1 rows in set. Elapsed: 0.215 sec. Processed 100.03 million rows, 800.21 MB (464.27 million rows/s., 3.71 GB/s.)

WITH

materialize(toDateTime64('2021-03-03 09:30:00.100', 3)) AS time,

addMilliseconds(time, 1250) AS fifth

SELECT count()

FROM numbers(100000000)

WHERE NOT ignore(fifth)

1 rows in set. Elapsed: 0.208 sec. Processed 100.03 million rows, 800.21 MB (481.04 million rows/s., 3.85 GB/s.)

###########

WITH

materialize(toDateTime64('2021-03-03 09:30:00.100', 3)) AS time,

fromUnixTimestamp64Milli(reinterpretAsInt64(toUnixTimestamp64Milli(time) + (1.25 * 1000))) AS first

SELECT count()

FROM numbers(100000000)

WHERE NOT ignore(first)

1 rows in set. Elapsed: 0.370 sec. Processed 100.03 million rows, 800.21 MB (270.31 million rows/s., 2.16 GB/s.)

WITH

materialize(toDateTime64('2021-03-03 09:30:00.100', 3)) AS time,

fromUnixTimestamp64Milli(toUnixTimestamp64Milli(time) + toInt16(1.25 * 1000)) AS first

SELECT count()

FROM numbers(100000000)

WHERE NOT ignore(first)

1 rows in set. Elapsed: 0.256 sec. Processed 100.03 million rows, 800.21 MB (391.06 million rows/s., 3.13 GB/s.)

WITH

materialize(toDateTime64('2021-03-03 09:30:00.100', 3)) AS time,

toDateTime64(toDecimal64(time, 3) + toDecimal64('1.25', 3), 3) AS second

SELECT count()

FROM numbers(100000000)

WHERE NOT ignore(second)

1 rows in set. Elapsed: 2.240 sec. Processed 100.03 million rows, 800.21 MB (44.65 million rows/s., 357.17 MB/s.)

SET decimal_check_overflow=0;

WITH

materialize(toDateTime64('2021-03-03 09:30:00.100', 3)) AS time,

toDateTime64(toDecimal64(time, 3) + toDecimal64('1.25', 3), 3) AS second

SELECT count()

FROM numbers(100000000)

WHERE NOT ignore(second)

1 rows in set. Elapsed: 1.991 sec. Processed 100.03 million rows, 800.21 MB (50.23 million rows/s., 401.81 MB/s.)

WITH

materialize(toDateTime64('2021-03-03 09:30:00.100', 3)) AS time,

reinterpret(reinterpret(time, 'Decimal64(3)') + toDecimal64('1.25', 3), 'DateTime64(3)') AS third

SELECT count()

FROM numbers(100000000)

WHERE NOT ignore(third)

1 rows in set. Elapsed: 0.515 sec. Processed 100.03 million rows, 800.21 MB (194.39 million rows/s., 1.56 GB/s.)

SET decimal_check_overflow=0;

WITH

materialize(toDateTime64('2021-03-03 09:30:00.100', 3)) AS time,

reinterpret(reinterpret(time, 'Decimal64(3)') + toDecimal64('1.25', 3), 'DateTime64(3)') AS third

SELECT count()

FROM numbers(100000000)

WHERE NOT ignore(third)

1 rows in set. Elapsed: 0.281 sec. Processed 100.03 million rows, 800.21 MB (356.21 million rows/s., 2.85 GB/s.)

4 - DISTINCT & GROUP BY & LIMIT 1 BY what the difference

DISTINCT

SELECT DISTINCT number

FROM numbers_mt(100000000)

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 4.00 GiB.

0 rows in set. Elapsed: 18.720 sec. Processed 100.03 million rows, 800.21 MB (5.34 million rows/s., 42.75 MB/s.)

SELECT DISTINCT number

FROM numbers_mt(100000000)

SETTINGS max_threads = 1

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 4.00 GiB.

0 rows in set. Elapsed: 18.349 sec. Processed 100.03 million rows, 800.21 MB (5.45 million rows/s., 43.61 MB/s.)

SELECT DISTINCT number

FROM numbers_mt(100000000)

LIMIT 1000

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 21.56 MiB.

0 rows in set. Elapsed: 0.014 sec. Processed 589.54 thousand rows, 4.72 MB (43.08 million rows/s., 344.61 MB/s.)

SELECT DISTINCT number % 1000

FROM numbers_mt(1000000000)

LIMIT 1000

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 1.80 MiB.

0 rows in set. Elapsed: 0.005 sec. Processed 589.54 thousand rows, 4.72 MB (127.23 million rows/s., 1.02 GB/s.)

SELECT DISTINCT number % 1000

FROM numbers(1000000000)

LIMIT 1001

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 847.05 KiB.

0 rows in set. Elapsed: 0.448 sec. Processed 1.00 billion rows, 8.00 GB (2.23 billion rows/s., 17.88 GB/s.)

- Final distinct step is single threaded

- Stream resultset
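The difference between LIMIT 1000 and LIMIT 1001 above (589.54 thousand rows read versus 1.00 billion) follows from the streaming behaviour: a streaming DISTINCT can stop reading as soon as the limit is reached, but if fewer distinct values exist than the limit asks for, it has to read everything. A simplified row-by-row sketch (ClickHouse reads in blocks, so its real row counts are larger); all function names are hypothetical:

```python
from itertools import islice

def counted(rows, stats):
    """Yield rows unchanged while counting how many were read."""
    for row in rows:
        stats["read"] += 1
        yield row

def distinct(rows):
    """Streaming DISTINCT: emit a value the first time it is seen."""
    seen = set()
    for row in rows:
        if row not in seen:
            seen.add(row)
            yield row

def run(total_rows, limit):
    """Return (rows returned, rows read) for DISTINCT n % 1000 ... LIMIT limit."""
    stats = {"read": 0}
    source = counted((n % 1000 for n in range(total_rows)), stats)
    result = list(islice(distinct(source), limit))
    return len(result), stats["read"]

print(run(100_000, 1000))   # (1000, 1000): stops once 1000 values are found
print(run(100_000, 1001))   # (1000, 100000): the 1001st value never appears
```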

GROUP BY

SELECT number

FROM numbers_mt(100000000)

GROUP BY number

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 4.04 GiB.

0 rows in set. Elapsed: 8.212 sec. Processed 100.00 million rows, 800.00 MB (12.18 million rows/s., 97.42 MB/s.)

SELECT number

FROM numbers_mt(100000000)

GROUP BY number

SETTINGS max_threads = 1

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 6.00 GiB.

0 rows in set. Elapsed: 19.206 sec. Processed 100.03 million rows, 800.21 MB (5.21 million rows/s., 41.66 MB/s.)

SELECT number

FROM numbers_mt(100000000)

GROUP BY number

LIMIT 1000

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 4.05 GiB.

0 rows in set. Elapsed: 4.852 sec. Processed 100.00 million rows, 800.00 MB (20.61 million rows/s., 164.88 MB/s.)

This query is faster than the first one because ClickHouse® doesn't need to merge states for all keys, only for the first 1000 (based on LIMIT).

SELECT number % 1000 AS key

FROM numbers_mt(1000000000)

GROUP BY key

LIMIT 1000

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 3.15 MiB.

0 rows in set. Elapsed: 0.770 sec. Processed 1.00 billion rows, 8.00 GB (1.30 billion rows/s., 10.40 GB/s.)

SELECT number % 1000 AS key

FROM numbers_mt(1000000000)

GROUP BY key

LIMIT 1001

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 3.77 MiB.

0 rows in set. Elapsed: 0.770 sec. Processed 1.00 billion rows, 8.00 GB (1.30 billion rows/s., 10.40 GB/s.)

- Multi threaded

- Will return result only after completion of aggregation

LIMIT BY

SELECT number

FROM numbers_mt(100000000)

LIMIT 1 BY number

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 6.00 GiB.

0 rows in set. Elapsed: 39.541 sec. Processed 100.00 million rows, 800.00 MB (2.53 million rows/s., 20.23 MB/s.)

SELECT number

FROM numbers_mt(100000000)

LIMIT 1 BY number

SETTINGS max_threads = 1

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 6.01 GiB.

0 rows in set. Elapsed: 36.773 sec. Processed 100.03 million rows, 800.21 MB (2.72 million rows/s., 21.76 MB/s.)

SELECT number

FROM numbers_mt(100000000)

LIMIT 1 BY number

LIMIT 1000

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 10.56 MiB.

0 rows in set. Elapsed: 0.019 sec. Processed 589.54 thousand rows, 4.72 MB (30.52 million rows/s., 244.20 MB/s.)

SELECT number % 1000 AS key

FROM numbers_mt(1000000000)

LIMIT 1 BY key

LIMIT 1000

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 5.14 MiB.

0 rows in set. Elapsed: 0.008 sec. Processed 589.54 thousand rows, 4.72 MB (71.27 million rows/s., 570.16 MB/s.)

SELECT number % 1000 AS key

FROM numbers_mt(1000000000)

LIMIT 1 BY key

LIMIT 1001

FORMAT `Null`

MemoryTracker: Peak memory usage (for query): 3.23 MiB.

0 rows in set. Elapsed: 36.027 sec. Processed 1.00 billion rows, 8.00 GB (27.76 million rows/s., 222.06 MB/s.)

- Single threaded

- Stream resultset

- Can return an arbitrary number of rows per key

5 - Imprecise parsing of literal Decimal or Float64

Decimal

SELECT

9.2::Decimal64(2) AS postgresql_cast,

toDecimal64(9.2, 2) AS to_function,

CAST(9.2, 'Decimal64(2)') AS cast_float_literal,

CAST('9.2', 'Decimal64(2)') AS cast_string_literal

┌─postgresql_cast─┬─to_function─┬─cast_float_literal─┬─cast_string_literal─┐

│ 9.2 │ 9.19 │ 9.19 │ 9.2 │

└─────────────────┴─────────────┴────────────────────┴─────────────────────┘

When we try to cast 64.32 to Decimal128(2), the resulting value is 64.31.

When the query parser sees a number with a decimal separator, it interprets it as a Float64 literal (64.32 has no exact Float64 representation, so you actually get something like 64.319999999999999999), and that Float64 is then cast to Decimal by dropping the extra precision.

The workaround is very simple: wrap the number in quotes (the query parser then treats it as a string literal and converts it to Decimal directly), or use PostgreSQL-like casting syntax:

select cast(64.32,'Decimal128(2)') a, cast('64.32','Decimal128(2)') b, 64.32::Decimal128(2) c;

┌─────a─┬─────b─┬─────c─┐

│ 64.31 │ 64.32 │ 64.32 │

└───────┴───────┴───────┘
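The same effect can be reproduced with Python's `decimal` module, which can show the exact binary value behind a float literal. This is only a sketch of the mechanism described above, assuming the cast drops extra digits (truncation); the helper names are hypothetical.

```python
from decimal import Decimal, ROUND_DOWN

CENT = Decimal("0.01")  # two digits after the decimal point

def via_float(literal: float) -> Decimal:
    """Float literal -> Decimal(2): Decimal(float) is the exact stored binary value."""
    return Decimal(literal).quantize(CENT, rounding=ROUND_DOWN)

def via_string(literal: str) -> Decimal:
    """String literal -> Decimal(2): parsed directly, no binary rounding involved."""
    return Decimal(literal).quantize(CENT, rounding=ROUND_DOWN)

print(Decimal(64.32))                             # slightly below 64.32
print(via_float(64.32), via_string("64.32"))      # 64.31 64.32
print(via_float(9.2), via_string("9.2"))          # 9.19 9.20
```

The float path loses a cent because the stored value is slightly below the written literal, while the string path keeps the value as written.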

Float64

SELECT

toFloat64(15008753.) AS to_func,

toFloat64('1.5008753E7') AS to_func_scientific,

CAST('1.5008753E7', 'Float64') AS cast_scientific

┌──to_func─┬─to_func_scientific─┬────cast_scientific─┐

│ 15008753 │ 15008753.000000002 │ 15008753.000000002 │

└──────────┴────────────────────┴────────────────────┘

6 - Multiple aligned date columns in PARTITION BY expression

An alternative to doing that with a minmax skip index.

CREATE TABLE part_key_multiple_dates

(

`key` UInt32,

`date` Date,

`time` DateTime,

`created_at` DateTime,

`inserted_at` DateTime

)

ENGINE = MergeTree

PARTITION BY (toYYYYMM(date), ignore(created_at, inserted_at))

ORDER BY (key, time);

INSERT INTO part_key_multiple_dates SELECT

number,

toDate(x),

now() + intDiv(number, 10) AS x,

x - (rand() % 100),

x + (rand() % 100)

FROM numbers(100000000);

SELECT count()

FROM part_key_multiple_dates

WHERE date > (now() + toIntervalDay(105));

┌─count()─┐

│ 8434210 │

└─────────┘

1 rows in set. Elapsed: 0.022 sec. Processed 11.03 million rows, 22.05 MB (501.94 million rows/s., 1.00 GB/s.)

SELECT count()

FROM part_key_multiple_dates

WHERE inserted_at > (now() + toIntervalDay(105));

┌─count()─┐

│ 9279818 │

└─────────┘

1 rows in set. Elapsed: 0.046 sec. Processed 11.03 million rows, 44.10 MB (237.64 million rows/s., 950.57 MB/s.)

SELECT count()

FROM part_key_multiple_dates

WHERE created_at > (now() + toIntervalDay(105));

┌─count()─┐

│ 9279139 │

└─────────┘

1 rows in set. Elapsed: 0.043 sec. Processed 11.03 million rows, 44.10 MB (258.22 million rows/s., 1.03 GB/s.)

7 - Row policies overhead (hiding 'removed' tenants)

No row policy

CREATE TABLE test_delete

(

tenant Int64,

key Int64,

ts DateTime,

value_a String

)

ENGINE = MergeTree

PARTITION BY toYYYYMM(ts)

ORDER BY (tenant, key, ts);

INSERT INTO test_delete

SELECT

number%5,

number,

toDateTime('2020-01-01')+number/10,

concat('some_looong_string', toString(number)),

FROM numbers(1e8);

INSERT INTO test_delete -- multiple small tenants

SELECT

number%5000,

number,

toDateTime('2020-01-01')+number/10,

concat('some_looong_string', toString(number)),

FROM numbers(1e8);

Q1) SELECT tenant, count() FROM test_delete GROUP BY tenant ORDER BY tenant LIMIT 6;

┌─tenant─┬──count()─┐

│ 0 │ 20020000 │

│ 1 │ 20020000 │

│ 2 │ 20020000 │

│ 3 │ 20020000 │

│ 4 │ 20020000 │

│ 5 │ 20000 │

└────────┴──────────┘

6 rows in set. Elapsed: 0.285 sec. Processed 200.00 million rows, 1.60 GB (702.60 million rows/s., 5.62 GB/s.)

Q2) SELECT uniq(value_a) FROM test_delete where tenant = 4;

┌─uniq(value_a)─┐

│ 20016427 │

└───────────────┘

1 row in set. Elapsed: 0.265 sec. Processed 20.23 million rows, 863.93 MB (76.33 million rows/s., 3.26 GB/s.)

Q3) SELECT max(ts) FROM test_delete where tenant = 4;

┌─────────────max(ts)─┐

│ 2020-04-25 17:46:39 │

└─────────────────────┘

1 row in set. Elapsed: 0.062 sec. Processed 20.23 million rows, 242.31 MB (324.83 million rows/s., 3.89 GB/s.)

Q4) SELECT max(ts) FROM test_delete where tenant = 4 and key = 444;

┌─────────────max(ts)─┐

│ 2020-01-01 00:00:44 │

└─────────────────────┘

1 row in set. Elapsed: 0.009 sec. Processed 212.99 thousand rows, 1.80 MB (24.39 million rows/s., 206.36 MB/s.)

row policy using expression

CREATE ROW POLICY pol1 ON test_delete USING tenant not in (1,2,3) TO all;

Q1) SELECT tenant, count() FROM test_delete GROUP BY tenant ORDER BY tenant LIMIT 6;

┌─tenant─┬──count()─┐

│ 0 │ 20020000 │

│ 4 │ 20020000 │

│ 5 │ 20000 │

│ 6 │ 20000 │

│ 7 │ 20000 │

│ 8 │ 20000 │

└────────┴──────────┘

6 rows in set. Elapsed: 0.333 sec. Processed 140.08 million rows, 1.12 GB (420.59 million rows/s., 3.36 GB/s.)

Q2) SELECT uniq(value_a) FROM test_delete where tenant = 4;

┌─uniq(value_a)─┐

│ 20016427 │

└───────────────┘

1 row in set. Elapsed: 0.287 sec. Processed 20.23 million rows, 863.93 MB (70.48 million rows/s., 3.01 GB/s.)

Q3) SELECT max(ts) FROM test_delete where tenant = 4;

┌─────────────max(ts)─┐

│ 2020-04-25 17:46:39 │

└─────────────────────┘

1 row in set. Elapsed: 0.080 sec. Processed 20.23 million rows, 242.31 MB (254.20 million rows/s., 3.05 GB/s.)

Q4) SELECT max(ts) FROM test_delete where tenant = 4 and key = 444;

┌─────────────max(ts)─┐

│ 2020-01-01 00:00:44 │

└─────────────────────┘

1 row in set. Elapsed: 0.011 sec. Processed 212.99 thousand rows, 3.44 MB (19.53 million rows/s., 315.46 MB/s.)

Q5) SELECT uniq(value_a) FROM test_delete where tenant = 1;

┌─uniq(value_a)─┐

│ 0 │

└───────────────┘

1 row in set. Elapsed: 0.008 sec. Processed 180.22 thousand rows, 1.44 MB (23.69 million rows/s., 189.54 MB/s.)

DROP ROW POLICY pol1 ON test_delete;

row policy using table subquery

create table deleted_tenants(tenant Int64) ENGINE=MergeTree order by tenant;

CREATE ROW POLICY pol1 ON test_delete USING tenant not in deleted_tenants TO all;

SELECT tenant, count() FROM test_delete GROUP BY tenant ORDER BY tenant LIMIT 6;

┌─tenant─┬──count()─┐

│ 0 │ 20020000 │

│ 1 │ 20020000 │

│ 2 │ 20020000 │

│ 3 │ 20020000 │

│ 4 │ 20020000 │

│ 5 │ 20000 │

└────────┴──────────┘

6 rows in set. Elapsed: 0.455 sec. Processed 200.00 million rows, 1.60 GB (439.11 million rows/s., 3.51 GB/s.)

insert into deleted_tenants values(1),(2),(3);

Q1) SELECT tenant, count() FROM test_delete GROUP BY tenant ORDER BY tenant LIMIT 6;

┌─tenant─┬──count()─┐

│ 0 │ 20020000 │

│ 4 │ 20020000 │

│ 5 │ 20000 │

│ 6 │ 20000 │

│ 7 │ 20000 │

│ 8 │ 20000 │

└────────┴──────────┘

6 rows in set. Elapsed: 0.329 sec. Processed 140.08 million rows, 1.12 GB (426.34 million rows/s., 3.41 GB/s.)

Q2) SELECT uniq(value_a) FROM test_delete where tenant = 4;

┌─uniq(value_a)─┐

│ 20016427 │

└───────────────┘

1 row in set. Elapsed: 0.287 sec. Processed 20.23 million rows, 863.93 MB (70.56 million rows/s., 3.01 GB/s.)

Q3) SELECT max(ts) FROM test_delete where tenant = 4;

┌─────────────max(ts)─┐

│ 2020-04-25 17:46:39 │

└─────────────────────┘

1 row in set. Elapsed: 0.080 sec. Processed 20.23 million rows, 242.31 MB (251.39 million rows/s., 3.01 GB/s.)

Q4) SELECT max(ts) FROM test_delete where tenant = 4 and key = 444;

┌─────────────max(ts)─┐

│ 2020-01-01 00:00:44 │

└─────────────────────┘

1 row in set. Elapsed: 0.010 sec. Processed 213.00 thousand rows, 3.44 MB (20.33 million rows/s., 328.44 MB/s.)

Q5) SELECT uniq(value_a) FROM test_delete where tenant = 1;

┌─uniq(value_a)─┐

│ 0 │

└───────────────┘

1 row in set. Elapsed: 0.008 sec. Processed 180.23 thousand rows, 1.44 MB (22.11 million rows/s., 176.90 MB/s.)

DROP ROW POLICY pol1 ON test_delete;

DROP TABLE deleted_tenants;

row policy using external dictionary (NOT dictHas)

create table deleted_tenants(tenant Int64, deleted UInt8 default 1) ENGINE=MergeTree order by tenant;

insert into deleted_tenants(tenant) values(1),(2),(3);

CREATE DICTIONARY deleted_tenants_dict (tenant UInt64, deleted UInt8)

PRIMARY KEY tenant SOURCE(CLICKHOUSE(TABLE deleted_tenants))

LIFETIME(600) LAYOUT(FLAT());

CREATE ROW POLICY pol1 ON test_delete USING NOT dictHas('deleted_tenants_dict', tenant) TO all;

Q1) SELECT tenant, count() FROM test_delete GROUP BY tenant ORDER BY tenant LIMIT 6;

┌─tenant─┬──count()─┐

│ 0 │ 20020000 │

│ 4 │ 20020000 │

│ 5 │ 20000 │

│ 6 │ 20000 │

│ 7 │ 20000 │

│ 8 │ 20000 │

└────────┴──────────┘

6 rows in set. Elapsed: 0.388 sec. Processed 200.00 million rows, 1.60 GB (515.79 million rows/s., 4.13 GB/s.)

Q2) SELECT uniq(value_a) FROM test_delete where tenant = 4;

┌─uniq(value_a)─┐

│ 20016427 │

└───────────────┘

1 row in set. Elapsed: 0.291 sec. Processed 20.23 million rows, 863.93 MB (69.47 million rows/s., 2.97 GB/s.)

Q3) SELECT max(ts) FROM test_delete where tenant = 4;

┌─────────────max(ts)─┐

│ 2020-04-25 17:46:39 │

└─────────────────────┘

1 row in set. Elapsed: 0.084 sec. Processed 20.23 million rows, 242.31 MB (240.07 million rows/s., 2.88 GB/s.)

Q4) SELECT max(ts) FROM test_delete where tenant = 4 and key = 444;

┌─────────────max(ts)─┐

│ 2020-01-01 00:00:44 │

└─────────────────────┘

1 row in set. Elapsed: 0.010 sec. Processed 212.99 thousand rows, 3.44 MB (21.45 million rows/s., 346.56 MB/s.)

Q5) SELECT uniq(value_a) FROM test_delete where tenant = 1;

┌─uniq(value_a)─┐

│ 0 │

└───────────────┘

1 row in set. Elapsed: 0.046 sec. Processed 20.22 million rows, 161.74 MB (440.26 million rows/s., 3.52 GB/s.)

DROP ROW POLICY pol1 ON test_delete;

DROP DICTIONARY deleted_tenants_dict;

DROP TABLE deleted_tenants;

row policy using external dictionary (dictHas)

create table deleted_tenants(tenant Int64, deleted UInt8 default 1) ENGINE=MergeTree order by tenant;

insert into deleted_tenants(tenant) select distinct tenant from test_delete where tenant not in (1,2,3);

CREATE DICTIONARY deleted_tenants_dict (tenant UInt64, deleted UInt8)

PRIMARY KEY tenant SOURCE(CLICKHOUSE(TABLE deleted_tenants))

LIFETIME(600) LAYOUT(FLAT());

CREATE ROW POLICY pol1 ON test_delete USING dictHas('deleted_tenants_dict', tenant) TO all;

Q1) SELECT tenant, count() FROM test_delete GROUP BY tenant ORDER BY tenant LIMIT 6;

┌─tenant─┬──count()─┐

│ 0 │ 20020000 │

│ 4 │ 20020000 │

│ 5 │ 20000 │

│ 6 │ 20000 │

│ 7 │ 20000 │

│ 8 │ 20000 │

└────────┴──────────┘

6 rows in set. Elapsed: 0.399 sec. Processed 200.00 million rows, 1.60 GB (501.18 million rows/s., 4.01 GB/s.)

Q2) SELECT uniq(value_a) FROM test_delete where tenant = 4;

┌─uniq(value_a)─┐

│ 20016427 │

└───────────────┘

1 row in set. Elapsed: 0.284 sec. Processed 20.23 million rows, 863.93 MB (71.30 million rows/s., 3.05 GB/s.)

Q3) SELECT max(ts) FROM test_delete where tenant = 4;

┌─────────────max(ts)─┐

│ 2020-04-25 17:46:39 │

└─────────────────────┘

1 row in set. Elapsed: 0.080 sec. Processed 20.23 million rows, 242.31 MB (251.88 million rows/s., 3.02 GB/s.)

Q4) SELECT max(ts) FROM test_delete where tenant = 4 and key = 444;

┌─────────────max(ts)─┐

│ 2020-01-01 00:00:44 │

└─────────────────────┘

1 row in set. Elapsed: 0.010 sec. Processed 212.99 thousand rows, 3.44 MB (22.01 million rows/s., 355.50 MB/s.)

Q5) SELECT uniq(value_a) FROM test_delete where tenant = 1;

┌─uniq(value_a)─┐

│ 0 │

└───────────────┘

1 row in set. Elapsed: 0.034 sec. Processed 20.22 million rows, 161.74 MB (589.90 million rows/s., 4.72 GB/s.)

DROP ROW POLICY pol1 ON test_delete;

DROP DICTIONARY deleted_tenants_dict;

DROP TABLE deleted_tenants;

row policy using engine=Set

create table deleted_tenants(tenant Int64) ENGINE=Set;

insert into deleted_tenants(tenant) values(1),(2),(3);

CREATE ROW POLICY pol1 ON test_delete USING tenant not in deleted_tenants TO all;

Q1) SELECT tenant, count() FROM test_delete GROUP BY tenant ORDER BY tenant LIMIT 6;

┌─tenant─┬──count()─┐

│ 0 │ 20020000 │

│ 4 │ 20020000 │

│ 5 │ 20000 │

│ 6 │ 20000 │

│ 7 │ 20000 │

│ 8 │ 20000 │

└────────┴──────────┘

6 rows in set. Elapsed: 0.322 sec. Processed 200.00 million rows, 1.60 GB (621.38 million rows/s., 4.97 GB/s.)

Q2) SELECT uniq(value_a) FROM test_delete where tenant = 4;

┌─uniq(value_a)─┐

│ 20016427 │

└───────────────┘

1 row in set. Elapsed: 0.275 sec. Processed 20.23 million rows, 863.93 MB (73.56 million rows/s., 3.14 GB/s.)

Q3) SELECT max(ts) FROM test_delete where tenant = 4;

┌─────────────max(ts)─┐

│ 2020-04-25 17:46:39 │

└─────────────────────┘

1 row in set. Elapsed: 0.084 sec. Processed 20.23 million rows, 242.31 MB (240.07 million rows/s., 2.88 GB/s.)

Q4) SELECT max(ts) FROM test_delete where tenant = 4 and key = 444;

┌─────────────max(ts)─┐

│ 2020-01-01 00:00:44 │

└─────────────────────┘

1 row in set. Elapsed: 0.010 sec. Processed 212.99 thousand rows, 3.44 MB (20.69 million rows/s., 334.18 MB/s.)

Q5) SELECT uniq(value_a) FROM test_delete where tenant = 1;

┌─uniq(value_a)─┐

│ 0 │

└───────────────┘

1 row in set. Elapsed: 0.030 sec. Processed 20.22 million rows, 161.74 MB (667.06 million rows/s., 5.34 GB/s.)

DROP ROW POLICY pol1 ON test_delete;

DROP TABLE deleted_tenants;

results

expression: CREATE ROW POLICY pol1 ON test_delete USING tenant not in (1,2,3) TO all;

table subq: CREATE ROW POLICY pol1 ON test_delete USING tenant not in deleted_tenants TO all;

ext. dict. NOT dictHas: CREATE ROW POLICY pol1 ON test_delete USING NOT dictHas('deleted_tenants_dict', tenant) TO all;

ext. dict. dictHas: CREATE ROW POLICY pol1 ON test_delete USING dictHas('deleted_tenants_dict', tenant) TO all;

Each cell shows elapsed seconds / rows processed (m = million, t = thousand). Q5 was not run without a policy.

| Q | no policy | expression | table subq | ext. dict. NOT dictHas | ext. dict. dictHas | engine=Set |
|---|---|---|---|---|---|---|
| Q1 | 0.285 / 200.00m | 0.333 / 140.08m | 0.329 / 140.08m | 0.388 / 200.00m | 0.399 / 200.00m | 0.322 / 200.00m |
| Q2 | 0.265 / 20.23m | 0.287 / 20.23m | 0.287 / 20.23m | 0.291 / 20.23m | 0.284 / 20.23m | 0.275 / 20.23m |
| Q3 | 0.062 / 20.23m | 0.080 / 20.23m | 0.080 / 20.23m | 0.084 / 20.23m | 0.080 / 20.23m | 0.084 / 20.23m |
| Q4 | 0.009 / 212.99t | 0.011 / 212.99t | 0.010 / 213.00t | 0.010 / 212.99t | 0.010 / 212.99t | 0.010 / 212.99t |
| Q5 | | 0.008 / 180.22t | 0.008 / 180.23t | 0.046 / 20.22m | 0.034 / 20.22m | 0.030 / 20.22m |

An expression in the row policy seems to be the fastest way (Q1, Q5).

8 - Why is simple `SELECT count()` Slow in ClickHouse®?

ClickHouse is a columnar database that provides excellent performance for analytical queries. However, in some cases, a simple count query can be slow. In this article, we’ll explore the reasons why this can happen and how to optimize the query.

Three Strategies for Counting Rows in ClickHouse

There are three ways to count rows in a table in ClickHouse:

- optimize_trivial_count_query: This strategy extracts the number of rows from the table metadata. It’s the fastest and most efficient way to count rows, but it only works for simple count queries.

- allow_experimental_projection_optimization: This strategy uses a virtual projection called _minmax_count_projection to count rows. It’s faster than scanning the table but slower than the trivial count query.

- Scanning the smallest column in the table and reading rows from that. This is the slowest strategy and is only used when the other two strategies can’t be used.

Why Does ClickHouse Sometimes Choose the Slowest Counting Strategy?

In some cases, ClickHouse may choose the slowest counting strategy even when there are faster options available. Here are some possible reasons why this can happen:

Row policies are used on the table: If row policies are used, ClickHouse needs to filter rows to give the proper count. You can check if row policies are used by selecting from system.row_policies.

Experimental light-weight delete feature was used on the table: If the experimental light-weight delete feature was used, ClickHouse may use the slowest counting strategy. You can check this by looking into parts_columns for the column named _row_exists. To do this, run the following query:

SELECT DISTINCT database, table FROM system.parts_columns WHERE column = '_row_exists';

You can also refer to this issue on GitHub for more information: https://github.com/ClickHouse/ClickHouse/issues/47930 .

SELECT FINAL or the final=1 setting is used.

max_parallel_replicas > 1 is used.

Sampling is used.

Some other features, such as allow_experimental_query_deduplication or empty_result_for_aggregation_by_empty_set, are used.

9 - Collecting query execution flamegraphs using system.trace_log

ClickHouse® has embedded functionality to analyze the details of query performance.

It’s the system.trace_log table.

By default it collects information only about queries that run longer than 1 sec (and collects stacktraces every second).

You can adjust that per query using the settings query_profiler_real_time_period_ns & query_profiler_cpu_time_period_ns.

Both work very similarly: at the desired interval they dump the stacktraces of all the threads that execute the query. The real timer lets you ‘see’ situations where the CPU was not doing much but time was spent, for example, on IO. The CPU timer shows the ‘hot’ points in calculations more accurately (it skips the IO time).

Trying to collect stacktraces with a frequency higher than a few kHz is usually not possible.
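Both period settings are expressed in nanoseconds, so the sampling frequency is simply one second divided by the period. A small helper sketch for converting between the two (the function names are hypothetical, not part of ClickHouse):

```python
NS_PER_SECOND = 1_000_000_000

def samples_per_second(period_ns: int) -> float:
    """Sampling frequency implied by a profiler period in nanoseconds."""
    if period_ns <= 0:
        raise ValueError("period_ns must be positive")
    return NS_PER_SECOND / period_ns

def period_ns_for(frequency_hz: float) -> int:
    """Period in nanoseconds for a desired sampling frequency."""
    return round(NS_PER_SECOND / frequency_hz)

print(samples_per_second(1_000_000))  # 1000.0 samples per second
print(samples_per_second(2_000_000))  # 500.0 samples per second
print(period_ns_for(100))             # 10000000 ns for 100 Hz
```

A period of 1000000 ns therefore corresponds to 1 kHz, which is already close to the practical upper bound mentioned above.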

To check where most of the RAM is used, you can collect stacktraces during memory allocations / deallocations by using the setting memory_profiler_sample_probability.

clickhouse-speedscope

# install

wget https://github.com/laplab/clickhouse-speedscope/archive/refs/heads/master.tar.gz -O clickhouse-speedscope.tar.gz

tar -xvzf clickhouse-speedscope.tar.gz

cd clickhouse-speedscope-master/

pip3 install -r requirements.txt

For debugging a particular query:

clickhouse-client

SET query_profiler_cpu_time_period_ns=1000000; -- 1000 times per 'cpu' sec

-- or SET query_profiler_real_time_period_ns=2000000; -- 500 times per 'real' sec.

-- or SET memory_profiler_sample_probability=0.1; -- to debug the memory allocations

SELECT ... <your select>

SYSTEM FLUSH LOGS;

-- get the query_id from the clickhouse-client output or from system.query_log (also pay attention to query_id vs initial_query_id for distributed queries).
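If the query_id was not noted from the client output, one possible way to look it up is to list the most recent finished queries from system.query_log. The helper below only prints such a query; recent_queries_sql and the 80-character preview length are assumptions made for this sketch.

```shell
# Hypothetical helper: print a query that lists the latest finished queries
# together with query_id and initial_query_id.
# Argument: how many rows to return (defaults to 10).
recent_queries_sql() {
  rq_limit="${1:-10}"
  cat <<SQL
SELECT event_time, query_id, initial_query_id, query_duration_ms, substring(query, 1, 80) AS query_head
FROM system.query_log
WHERE type = 'QueryFinish' AND event_date = today()
ORDER BY event_time DESC
LIMIT ${rq_limit}
SQL
}

# Print the query for the 5 most recent finished queries.
recent_queries_sql 5
```

With a server available, the output can be run as clickhouse-client -q "$(recent_queries_sql 5)". For distributed queries, rows on the shards carry the initiator's id in initial_query_id.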

Now let’s process that:

python3 main.py & # start the proxy in background

python3 main.py --query-id 908952ee-71a8-48a4-84d5-f4db92d45a5d # process the stacktraces

fg # get the proxy from background

Ctrl + C # stop it.

To access ClickHouse with a different username, password, etc., see the source of https://github.com/laplab/clickhouse-speedscope/blob/master/main.py

clickhouse-flamegraph

Installation & usage instructions: https://github.com/Slach/clickhouse-flamegraph

pure flamegraph.pl examples

git clone https://github.com/brendangregg/FlameGraph /opt/flamegraph

clickhouse-client -q "SELECT arrayStringConcat(arrayReverse(arrayMap(x -> concat( addressToLine(x), '#', demangle(addressToSymbol(x)) ), trace)), ';') AS stack, count() AS samples FROM system.trace_log WHERE event_time >= subtractMinutes(now(),10) GROUP BY trace FORMAT TabSeparated SETTINGS allow_introspection_functions=1" | /opt/flamegraph/flamegraph.pl > flamegraph.svg

clickhouse-client -q "SELECT arrayStringConcat((arrayMap(x -> concat(splitByChar('/', addressToLine(x))[-1], '#', demangle(addressToSymbol(x)) ), trace)), ';') AS stack, sum(abs(size)) AS samples FROM system.trace_log where trace_type = 'Memory' and event_date = today() group by trace order by samples desc FORMAT TabSeparated SETTINGS allow_introspection_functions=1" | /opt/flamegraph/flamegraph.pl > allocs.svg

clickhouse-client -q "SELECT arrayStringConcat(arrayReverse(arrayMap(x -> concat(splitByChar('/', addressToLine(x))[-1], '#', demangle(addressToSymbol(x)) ), trace)), ';') AS stack, count() AS samples FROM system.trace_log where trace_type = 'Memory' group by trace FORMAT TabSeparated SETTINGS allow_introspection_functions=1" | /opt/flamegraph/flamegraph.pl > ~/mem1.svg
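The examples above aggregate over every query in the chosen time range. To narrow the flamegraph to a single query, the same pattern can be filtered by query_id. The sketch below only prints such a query; folded_stacks_sql is a hypothetical helper name, and restricting to trace_type = 'CPU' is an assumption chosen for illustration.

```shell
# Hypothetical helper: print a query that produces folded stacks
# (one "frame;frame;... <tab> samples" row per distinct trace) for one query_id.
# Argument: the query_id to analyze.
folded_stacks_sql() {
  fs_query_id="$1"
  cat <<SQL
SELECT arrayStringConcat(arrayReverse(arrayMap(x -> demangle(addressToSymbol(x)), trace)), ';') AS stack, count() AS samples
FROM system.trace_log
WHERE query_id = '${fs_query_id}' AND trace_type = 'CPU'
GROUP BY trace
FORMAT TabSeparated
SETTINGS allow_introspection_functions=1
SQL
}

# Print the query for the example query_id used earlier in this article.
folded_stacks_sql 908952ee-71a8-48a4-84d5-f4db92d45a5d
```

With a server available, the result can be piped into flamegraph.pl in the same way as above: clickhouse-client -q "$(folded_stacks_sql <query_id>)" | /opt/flamegraph/flamegraph.pl > query.svg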

similar using perf

apt-get update -y

apt-get install -y linux-tools-common linux-tools-generic linux-tools-`uname -r` git

apt-get install -y clickhouse-common-static-dbg clickhouse-common-dbg

mkdir -p /opt/flamegraph

git clone https://github.com/brendangregg/FlameGraph /opt/flamegraph

perf record -F 99 -p $(pidof clickhouse) -g

perf script > /tmp/out.perf

/opt/flamegraph/stackcollapse-perf.pl /tmp/out.perf | /opt/flamegraph/flamegraph.pl > /tmp/flamegraph.svg

See also:

https://kb.altinity.com/altinity-kb-queries-and-syntax/troubleshooting/#flamegraph

10 - Using array functions to mimic window-functions alike behavior

There are cases where you may need to mimic window functions using arrays in ClickHouse. This could be for optimization purposes, to better manage memory, or to enable on-disk spilling, especially if you’re working with an older version of ClickHouse that doesn’t natively support window functions.

Here’s an example demonstrating how to mimic a window function like runningDifference() using arrays:

Step 1: Create Sample Data

We’ll start by creating a test table with some sample data:

DROP TABLE IF EXISTS test_running_difference;

CREATE TABLE test_running_difference

ENGINE = Log AS

SELECT

number % 20 AS id,

toDateTime('2010-01-01 00:00:00') + (intDiv(number, 20) * 15) AS ts,

(number * round(xxHash32(number % 20) / 1000000)) - round(rand() / 1000000) AS val

FROM numbers(100);

SELECT * FROM test_running_difference;

┌─id─┬──────────────────ts─┬────val─┐

│ 0 │ 2010-01-01 00:00:00 │ -1209 │

│ 1 │ 2010-01-01 00:00:00 │ 43 │

│ 2 │ 2010-01-01 00:00:00 │ 4322 │

│ 3 │ 2010-01-01 00:00:00 │ -25 │

│ 4 │ 2010-01-01 00:00:00 │ 13720 │

│ 5 │ 2010-01-01 00:00:00 │ 903 │

│ 6 │ 2010-01-01 00:00:00 │ 18062 │

│ 7 │ 2010-01-01 00:00:00 │ -2873 │

│ 8 │ 2010-01-01 00:00:00 │ 6286 │

│ 9 │ 2010-01-01 00:00:00 │ 13399 │

│ 10 │ 2010-01-01 00:00:00 │ 18320 │

│ 11 │ 2010-01-01 00:00:00 │ 11731 │

│ 12 │ 2010-01-01 00:00:00 │ 857 │

│ 13 │ 2010-01-01 00:00:00 │ 8752 │

│ 14 │ 2010-01-01 00:00:00 │ 23060 │

│ 15 │ 2010-01-01 00:00:00 │ 41902 │

│ 16 │ 2010-01-01 00:00:00 │ 39406 │

│ 17 │ 2010-01-01 00:00:00 │ 50010 │

│ 18 │ 2010-01-01 00:00:00 │ 57673 │

│ 19 │ 2010-01-01 00:00:00 │ 51389 │

│ 0 │ 2010-01-01 00:00:15 │ 66839 │

│ 1 │ 2010-01-01 00:00:15 │ 19440 │

│ 2 │ 2010-01-01 00:00:15 │ 74513 │

│ 3 │ 2010-01-01 00:00:15 │ 10542 │

│ 4 │ 2010-01-01 00:00:15 │ 94245 │

│ 5 │ 2010-01-01 00:00:15 │ 8230 │

│ 6 │ 2010-01-01 00:00:15 │ 87823 │

│ 7 │ 2010-01-01 00:00:15 │ -128 │

│ 8 │ 2010-01-01 00:00:15 │ 30101 │

│ 9 │ 2010-01-01 00:00:15 │ 54321 │

│ 10 │ 2010-01-01 00:00:15 │ 64078 │

│ 11 │ 2010-01-01 00:00:15 │ 31886 │

│ 12 │ 2010-01-01 00:00:15 │ 8749 │

│ 13 │ 2010-01-01 00:00:15 │ 28982 │

│ 14 │ 2010-01-01 00:00:15 │ 61299 │

│ 15 │ 2010-01-01 00:00:15 │ 95867 │

│ 16 │ 2010-01-01 00:00:15 │ 93667 │

│ 17 │ 2010-01-01 00:00:15 │ 114072 │

│ 18 │ 2010-01-01 00:00:15 │ 124279 │

│ 19 │ 2010-01-01 00:00:15 │ 109605 │

│ 0 │ 2010-01-01 00:00:30 │ 135082 │

│ 1 │ 2010-01-01 00:00:30 │ 37345 │

│ 2 │ 2010-01-01 00:00:30 │ 148744 │

│ 3 │ 2010-01-01 00:00:30 │ 21607 │

│ 4 │ 2010-01-01 00:00:30 │ 171744 │

│ 5 │ 2010-01-01 00:00:30 │ 14736 │

│ 6 │ 2010-01-01 00:00:30 │ 155349 │

│ 7 │ 2010-01-01 00:00:30 │ -3901 │

│ 8 │ 2010-01-01 00:00:30 │ 54303 │

│ 9 │ 2010-01-01 00:00:30 │ 89629 │

│ 10 │ 2010-01-01 00:00:30 │ 106595 │

│ 11 │ 2010-01-01 00:00:30 │ 54545 │

│ 12 │ 2010-01-01 00:00:30 │ 18903 │

│ 13 │ 2010-01-01 00:00:30 │ 48023 │

│ 14 │ 2010-01-01 00:00:30 │ 97930 │

│ 15 │ 2010-01-01 00:00:30 │ 152165 │

│ 16 │ 2010-01-01 00:00:30 │ 146130 │

│ 17 │ 2010-01-01 00:00:30 │ 174854 │

│ 18 │ 2010-01-01 00:00:30 │ 189194 │

│ 19 │ 2010-01-01 00:00:30 │ 170134 │

│ 0 │ 2010-01-01 00:00:45 │ 207471 │

│ 1 │ 2010-01-01 00:00:45 │ 54323 │

│ 2 │ 2010-01-01 00:00:45 │ 217984 │

│ 3 │ 2010-01-01 00:00:45 │ 31835 │

│ 4 │ 2010-01-01 00:00:45 │ 252709 │

│ 5 │ 2010-01-01 00:00:45 │ 21493 │

│ 6 │ 2010-01-01 00:00:45 │ 221271 │

│ 7 │ 2010-01-01 00:00:45 │ -488 │

│ 8 │ 2010-01-01 00:00:45 │ 76827 │

│ 9 │ 2010-01-01 00:00:45 │ 131066 │

│ 10 │ 2010-01-01 00:00:45 │ 149087 │

│ 11 │ 2010-01-01 00:00:45 │ 71934 │

│ 12 │ 2010-01-01 00:00:45 │ 25125 │

│ 13 │ 2010-01-01 00:00:45 │ 65274 │

│ 14 │ 2010-01-01 00:00:45 │ 135980 │

│ 15 │ 2010-01-01 00:00:45 │ 210910 │

│ 16 │ 2010-01-01 00:00:45 │ 200007 │