This is the multi-page printable view of this section. Click here to print.

Setup & maintenance

- 1: S3 & object storage

- 1.1: AWS S3 Recipes

- 1.2: Clean up orphaned objects on s3

- 1.3: How much data are written to S3 during mutations

- 1.4: Example of the table at s3 with cache

- 1.5: S3Disk

- 2: AggregateFunction(uniq, UUID) doubled after ClickHouse® upgrade

- 3: Can not connect to my ClickHouse® server

- 4: cgroups and kubernetes cloud providers

- 5: Transforming ClickHouse logs to ndjson using Vector.dev

- 6: Altinity Kubernetes Operator For ClickHouse®

- 7: ClickHouse® and different filesystems

- 8: ClickHouse® Access Control and Account Management (RBAC)

- 9: Client Timeouts

- 10: Compatibility layer for the Altinity Kubernetes Operator for ClickHouse®

- 11: How to convert uniqExact states to approximate uniq functions states

- 12: Custom Settings

- 13: Description of asynchronous_metrics

- 14: ClickHouse® data/disk encryption (at rest)

- 15: DR two DC

- 16: How ALTERs work in ClickHouse®

- 17: How to recreate a table in case of total corruption of the replication queue

- 18: http handler example

- 19: Jemalloc heap profiling

- 20: Logging

- 21: High Memory Usage During Merge in system.metric_log

- 22: Precreate parts using clickhouse-local

- 23: Recovery after complete data loss

- 24: Replication: Can not resolve host of another ClickHouse® server

- 25: source parts size is greater than the current maximum

- 26: Successful ClickHouse® deployment plan

- 27: sysall database (system tables on a cluster level)

- 28: Timeouts during OPTIMIZE FINAL

- 29: Use an executable dictionary as cron task

- 30: Useful settings to turn on/Defaults that should be reconsidered

- 31: Who ate my CPU

- 32: Zookeeper session has expired

- 33: Server configuration files

- 34: Aggressive merges

- 35: Altinity Backup for ClickHouse®

- 36: Altinity packaging compatibility >21.x and earlier

- 37: AWS EC2 Storage

- 38: ClickHouse® in Docker

- 39: ClickHouse® Monitoring

- 40: ClickHouse® versions

- 41: Configure ClickHouse® for low memory environments

- 42: Converting MergeTree to Replicated

- 43: Data Migration

- 43.1: MSSQL bcp pipe to clickhouse-client

- 43.2: Add/Remove a new replica to a ClickHouse® cluster

- 43.3: clickhouse-copier

- 43.3.1: clickhouse-copier 20.3 and earlier

- 43.3.2: clickhouse-copier 20.4 - 21.6

- 43.3.3: Kubernetes job for clickhouse-copier

- 43.4: Distributed table to ClickHouse® Cluster

- 43.5: Fetch Alter Table

- 43.6: Remote table function

- 43.7: Moving ClickHouse to Another Server

- 44: DDLWorker and DDL queue problems

- 45: differential backups using clickhouse-backup

- 46: High CPU usage in ClickHouse®

- 47: Load balancers

- 48: memory configuration settings

- 49: Memory Overcommiter

- 50: Moving a table to another device

- 51: Object consistency in a cluster

- 52: Production Cluster Configuration Guide

- 52.1: Backups

- 52.2: Cluster Configuration FAQ

- 52.3: Cluster Configuration Process

- 52.4: Hardware Requirements

- 52.5: Network Configuration

- 53: System tables ate my disk

- 54: ClickHouse® Replication problems

- 55: Replication queue

- 56: Schema migration tools for ClickHouse®

- 56.1: golang-migrate

- 57: Settings to adjust

- 58: Shutting down a node

- 59: SSL connection unexpectedly closed

- 60: Suspiciously many broken parts

- 61: Threads

- 62: Who ate my ClickHouse® memory?

- 63: X rows of Y total rows in filesystem are suspicious

- 64: ZooKeeper

- 64.1: clickhouse-keeper-initd

- 64.2: clickhouse-keeper-service

- 64.3: Install standalone Zookeeper for ClickHouse® on Ubuntu / Debian

- 64.4: How to check the list of watches

- 64.5: JVM sizes and garbage collector settings

- 64.6: Proper setup

- 64.7: Recovering from complete metadata loss in ZooKeeper

- 64.8: Using clickhouse-keeper

- 64.9: ZooKeeper backup

- 64.10: ZooKeeper cluster migration

- 64.11: ZooKeeper cluster migration when using K8s node local storage

- 64.12: ZooKeeper Monitoring

- 64.13: ZooKeeper schema

1 - S3 & object storage

1.1 - AWS S3 Recipes

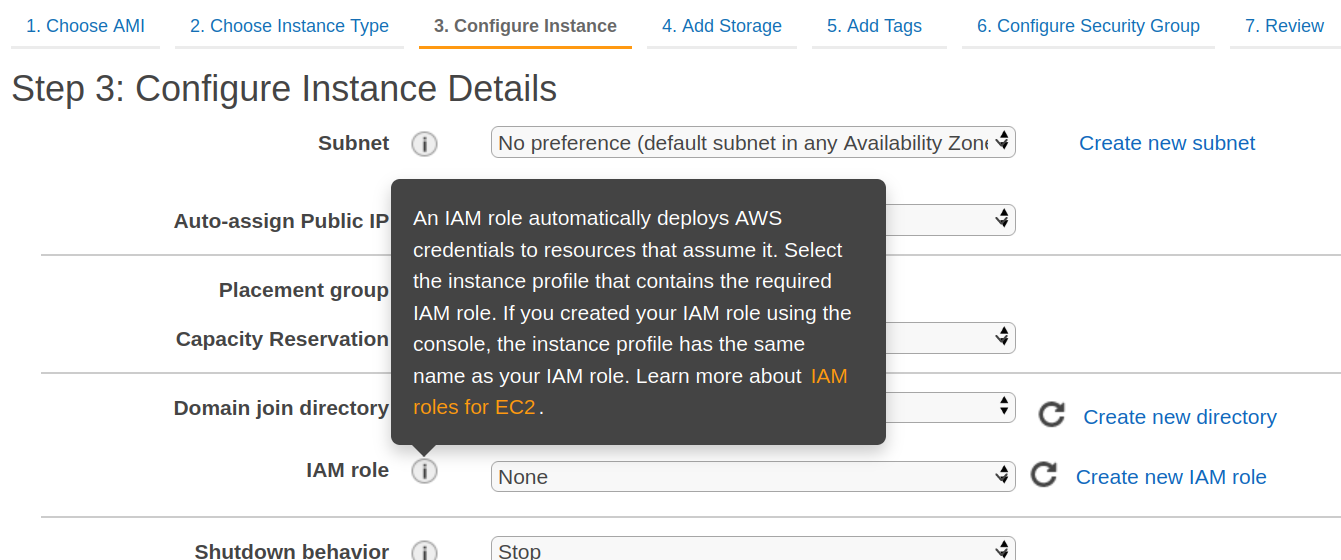

Using AWS IAM — Identity and Access Management roles

For EC2 instance, there is an option to configure an IAM role:

Role shall contain a policy with permissions like:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "allow-put-and-get",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject"

],

"Resource": "arn:aws:s3:::BUCKET_NAME/test_s3_disk/*"

}

]

}

Corresponding configuration of ClickHouse®:

<clickhouse>

<storage_configuration>

<disks>

<disk_s3>

<type>s3</type>

<endpoint>http://s3.us-east-1.amazonaws.com/BUCKET_NAME/test_s3_disk/</endpoint>

<use_environment_credentials>true</use_environment_credentials>

</disk_s3>

</disks>

<policies>

<policy_s3_only>

<volumes>

<volume_s3>

<disk>disk_s3</disk>

</volume_s3>

</volumes>

</policy_s3_only>

</policies>

</storage_configuration>

</clickhouse>

Small check:

CREATE TABLE table_s3 (number Int64) ENGINE=MergeTree() ORDER BY tuple() PARTITION BY tuple() SETTINGS storage_policy='policy_s3_only';

INSERT INTO table_s3 SELECT * FROM system.numbers LIMIT 100000000;

SELECT * FROM table_s3;

DROP TABLE table_s3;

How to use AWS IRSA and IAM in the Altinity Kubernetes Operator for ClickHouse to allow S3 backup without Explicit credentials

Install clickhouse-operator https://github.com/Altinity/clickhouse-operator/tree/master/docs/operator_installation_details.md

Create Role

Create service account with annotations

apiVersion: v1

kind: ServiceAccount

metadata:

name: <SERVICE ACOUNT NAME>

namespace: <NAMESPACE>

annotations:

eks.amazonaws.com/role-arn: arn:aws:iam::<ACCOUNT_ID>:role/<ROLE_NAME>

Link service account to podTemplate it will create AWS_ROLE_ARN and AWS_WEB_IDENTITY_TOKEN_FILE environment variables.

apiVersion: "clickhouse.altinity.com/v1"

kind: "ClickHouseInstallation"

metadata:

name: <NAME>

namespace: <NAMESPACE>

spec:

defaults:

templates:

podTemplate: <POD_TEMPLATE_NAME>

templates:

podTemplates:

- name: <POD_TEMPLATE_NAME>

spec:

serviceAccountName: <SERVICE ACCOUNT NAME>

containers:

- name: clickhouse-backup

For EC2 instances the same environment variables should be created:

AWS_ROLE_ARN=arn:aws:iam::<ACCOUNT_ID>:role/<ROLE_NAME>

AWS_WEB_IDENTITY_TOKEN_FILE=/var/run/secrets/eks.amazonaws.com/serviceaccount/token

1.2 - Clean up orphaned objects on s3

Problems

- TRUNCATE and DROP TABLE remove metadata only.

- Long-running queries, merges or other replicas may still reference parts, so ClickHouse delays removal.

- There are bugs in Clickhouse that leave orphaned files, especially after failures.

Solutions

- use our utility for garbage collection - https://github.com/Altinity/s3gc

- or create a separate path in the bucket for every table and every replica and remove the whole path in AWS console

- you can also use clickhouse-disk utility to delete s3 data:

clickhouse-disks --disk s3 --query "remove /cluster/database/table/replica1"

1.3 - How much data are written to S3 during mutations

Configuration

S3 disk with disabled merges

<clickhouse>

<storage_configuration>

<disks>

<s3disk>

<type>s3</type>

<endpoint>https://s3.us-east-1.amazonaws.com/mybucket/test/test/</endpoint>

<use_environment_credentials>1</use_environment_credentials> <!-- use IAM AWS role -->

<!--access_key_id>xxxx</access_key_id>

<secret_access_key>xxx</secret_access_key-->

</s3disk>

</disks>

<policies>

<s3tiered>

<volumes>

<default>

<disk>default</disk>

</default>

<s3disk>

<disk>s3disk</disk>

<prefer_not_to_merge>true</prefer_not_to_merge>

</s3disk>

</volumes>

</s3tiered>

</policies>

</storage_configuration>

</clickhouse>

Let’s create a table and load some synthetic data.

CREATE TABLE test_s3

(

`A` Int64,

`S` String,

`D` Date

)

ENGINE = MergeTree

PARTITION BY D

ORDER BY A

SETTINGS storage_policy = 's3tiered';

insert into test_s3 select number, number, today() - intDiv(number, 10000000) from numbers(7e8);

0 rows in set. Elapsed: 98.091 sec. Processed 700.36 million rows, 5.60 GB (7.14 million rows/s., 57.12 MB/s.)

select disk_name, partition, sum(rows), formatReadableSize(sum(bytes_on_disk)) size, count() part_count

from system.parts where table= 'test_s3' and active

group by disk_name, partition

order by partition;

┌─disk_name─┬─partition──┬─sum(rows)─┬─size──────┬─part_count─┐

│ default │ 2023-05-06 │ 10000000 │ 78.23 MiB │ 5 │

│ default │ 2023-05-07 │ 10000000 │ 78.31 MiB │ 6 │

│ default │ 2023-05-08 │ 10000000 │ 78.16 MiB │ 5 │

....

│ default │ 2023-07-12 │ 10000000 │ 78.21 MiB │ 5 │

│ default │ 2023-07-13 │ 10000000 │ 78.23 MiB │ 6 │

│ default │ 2023-07-14 │ 10000000 │ 77.39 MiB │ 5 │

└───────────┴────────────┴───────────┴───────────┴────────────┘

70 rows in set. Elapsed: 0.023 sec.

Performance of mutations for a local EBS (throughput: 500 MB/s)

select * from test_s3 where A=490000000;

1 row in set. Elapsed: 0.020 sec. Processed 8.19 thousand rows, 92.67 KB (419.17 thousand rows/s., 4.74 MB/s.)

select * from test_s3 where S='490000000';

1 row in set. Elapsed: 14.117 sec. Processed 700.00 million rows, 12.49 GB (49.59 million rows/s., 884.68 MB/s.)

delete from test_s3 where S = '490000000';

0 rows in set. Elapsed: 22.192 sec.

delete from test_s3 where A = '490000001';

0 rows in set. Elapsed: 2.243 sec.

alter table test_s3 delete where S = 590000000 settings mutations_sync=2;

0 rows in set. Elapsed: 21.387 sec.

alter table test_s3 delete where A = '590000001' settings mutations_sync=2;

0 rows in set. Elapsed: 3.372 sec.

alter table test_s3 update S='' where S = '690000000' settings mutations_sync=2;

0 rows in set. Elapsed: 20.265 sec.

alter table test_s3 update S='' where A = '690000001' settings mutations_sync=2;

0 rows in set. Elapsed: 1.979 sec.

Let’s move data to S3

alter table test_s3 modify TTL D + interval 10 day to disk 's3disk';

-- 10 minutes later

┌─disk_name─┬─partition──┬─sum(rows)─┬─size──────┬─part_count─┐

│ s3disk │ 2023-05-06 │ 10000000 │ 78.23 MiB │ 5 │

│ s3disk │ 2023-05-07 │ 10000000 │ 78.31 MiB │ 6 │

│ s3disk │ 2023-05-08 │ 10000000 │ 78.16 MiB │ 5 │

│ s3disk │ 2023-05-09 │ 10000000 │ 78.21 MiB │ 6 │

│ s3disk │ 2023-05-10 │ 10000000 │ 78.21 MiB │ 6 │

...

│ s3disk │ 2023-07-02 │ 10000000 │ 78.22 MiB │ 5 │

...

│ default │ 2023-07-11 │ 10000000 │ 78.20 MiB │ 6 │

│ default │ 2023-07-12 │ 10000000 │ 78.21 MiB │ 5 │

│ default │ 2023-07-13 │ 10000000 │ 78.23 MiB │ 6 │

│ default │ 2023-07-14 │ 10000000 │ 77.40 MiB │ 5 │

└───────────┴────────────┴───────────┴───────────┴────────────┘

70 rows in set. Elapsed: 0.007 sec.

Sizes of a table on S3 and a size of each column

select sum(rows), formatReadableSize(sum(bytes_on_disk)) size

from system.parts where table= 'test_s3' and active and disk_name = 's3disk';

┌─sum(rows)─┬─size─────┐

│ 600000000 │ 4.58 GiB │

└───────────┴──────────┘

SELECT

database,

table,

column,

formatReadableSize(sum(column_data_compressed_bytes) AS size) AS compressed

FROM system.parts_columns

WHERE (active = 1) AND (database LIKE '%') AND (table LIKE 'test_s3') AND (disk_name = 's3disk')

GROUP BY

database,

table,

column

ORDER BY column ASC

┌─database─┬─table───┬─column─┬─compressed─┐

│ default │ test_s3 │ A │ 2.22 GiB │

│ default │ test_s3 │ D │ 5.09 MiB │

│ default │ test_s3 │ S │ 2.33 GiB │

└──────────┴─────────┴────────┴────────────┘

S3 Statistics of selects

select *, _part from test_s3 where A=100000000;

┌─────────A─┬─S─────────┬──────────D─┬─_part──────────────────┐

│ 100000000 │ 100000000 │ 2023-07-08 │ 20230708_106_111_1_738 │

└───────────┴───────────┴────────────┴────────────────────────┘

1 row in set. Elapsed: 0.104 sec. Processed 8.19 thousand rows, 65.56 KB (79.11 thousand rows/s., 633.07 KB/s.)

┌─S3GetObject─┬─S3PutObject─┬─ReadBufferFromS3─┬─WriteBufferFromS3─┐

│ 6 │ 0 │ 70.58 KiB │ 0.00 B │

└─────────────┴─────────────┴──────────────────┴───────────────────┘

Select by primary key read only 70.58 KiB from S3

Size of this part

SELECT

database, table, column,

formatReadableSize(sum(column_data_compressed_bytes) AS size) AS compressed

FROM system.parts_columns

WHERE (active = 1) AND (database LIKE '%') AND (table LIKE 'test_s3') AND (disk_name = 's3disk')

and name = '20230708_106_111_1_738'

GROUP BY database, table, column ORDER BY column ASC

┌─database─┬─table───┬─column─┬─compressed─┐

│ default │ test_s3 │ A │ 22.51 MiB │

│ default │ test_s3 │ D │ 51.47 KiB │

│ default │ test_s3 │ S │ 23.52 MiB │

└──────────┴─────────┴────────┴────────────┘

select * from test_s3 where S='100000000';

┌─────────A─┬─S─────────┬──────────D─┐

│ 100000000 │ 100000000 │ 2023-07-08 │

└───────────┴───────────┴────────────┘

1 row in set. Elapsed: 86.745 sec. Processed 700.00 million rows, 12.49 GB (8.07 million rows/s., 144.04 MB/s.)

┌─S3GetObject─┬─S3PutObject─┬─ReadBufferFromS3─┬─WriteBufferFromS3─┐

│ 947 │ 0 │ 2.36 GiB │ 0.00 B │

└─────────────┴─────────────┴──────────────────┴───────────────────┘

Select using fullscan of S column read only 2.36 GiB from S3, the whole S column (2.33 GiB) plus parts of A and D.

delete from test_s3 where A=100000000;

0 rows in set. Elapsed: 17.429 sec.

┌─q──┬─S3GetObject─┬─S3PutObject─┬─ReadBufferFromS3─┬─WriteBufferFromS3─┐

│ Q3 │ 2981 │ 6 │ 23.06 MiB │ 27.25 KiB │

└────┴─────────────┴─────────────┴──────────────────┴───────────────────┘

insert into test select 'Q3' q, event,value from system.events where event like '%S3%';

delete from test_s3 where S='100000001';

0 rows in set. Elapsed: 31.417 sec.

┌─q──┬─S3GetObject─┬─S3PutObject─┬─ReadBufferFromS3─┬─WriteBufferFromS3─┐

│ Q4 │ 4209 │ 6 │ 2.39 GiB │ 27.25 KiB │

└────┴─────────────┴─────────────┴──────────────────┴───────────────────┘

insert into test select 'Q4' q, event,value from system.events where event like '%S3%';

alter table test_s3 delete where A=110000000 settings mutations_sync=2;

0 rows in set. Elapsed: 19.521 sec.

┌─q──┬─S3GetObject─┬─S3PutObject─┬─ReadBufferFromS3─┬─WriteBufferFromS3─┐

│ Q5 │ 2986 │ 15 │ 42.27 MiB │ 41.72 MiB │

└────┴─────────────┴─────────────┴──────────────────┴───────────────────┘

insert into test select 'Q5' q, event,value from system.events where event like '%S3%';

alter table test_s3 delete where S='110000001' settings mutations_sync=2;

0 rows in set. Elapsed: 29.650 sec.

┌─q──┬─S3GetObject─┬─S3PutObject─┬─ReadBufferFromS3─┬─WriteBufferFromS3─┐

│ Q6 │ 4212 │ 15 │ 2.42 GiB │ 41.72 MiB │

└────┴─────────────┴─────────────┴──────────────────┴───────────────────┘

insert into test select 'Q6' q, event,value from system.events where event like '%S3%';

1.4 - Example of the table at s3 with cache

Storage configuration

cat /etc/clickhouse-server/config.d/s3.xml

<clickhouse>

<storage_configuration>

<disks>

<s3disk>

<type>s3</type>

<endpoint>https://s3.us-east-1.amazonaws.com/mybucket/test/s3cached/</endpoint>

<use_environment_credentials>1</use_environment_credentials> <!-- use IAM AWS role -->

<!--access_key_id>xxxx</access_key_id>

<secret_access_key>xxx</secret_access_key-->

</s3disk>

<cache>

<type>cache</type>

<disk>s3disk</disk>

<path>/var/lib/clickhouse/disks/s3_cache/</path>

<max_size>50Gi</max_size> <!-- 50GB local cache to cache remote data -->

</cache>

</disks>

<policies>

<s3tiered>

<volumes>

<default>

<disk>default</disk>

<max_data_part_size_bytes>50000000000</max_data_part_size_bytes> <!-- only for parts less than 50GB after they moved to s3 during merges -->

</default>

<s3cached>

<disk>cache</disk> <!-- sandwich cache plus s3disk -->

<!-- prefer_not_to_merge>true</prefer_not_to_merge>

<perform_ttl_move_on_insert>false</perform_ttl_move_on_insert-->

</s3cached>

</volumes>

</s3tiered>

</policies>

</storage_configuration>

</clickhouse>

select * from system.disks

┌─name────┬─path──────────────────────────────┬───────────free_space─┬──────────total_space─┬

│ cache │ /var/lib/clickhouse/disks/s3disk/ │ 18446744073709551615 │ 18446744073709551615 │

│ default │ /var/lib/clickhouse/ │ 149113987072 │ 207907635200 │

│ s3disk │ /var/lib/clickhouse/disks/s3disk/ │ 18446744073709551615 │ 18446744073709551615 │

└─────────┴───────────────────────────────────┴──────────────────────┴──────────────────────┴

select * from system.storage_policies;

┌─policy_name─┬─volume_name─┬─volume_priority─┬─disks───────┬─volume_type─┬─max_data_part_size─┬─move_factor─┬─prefer_not_to_merge─┐

│ default │ default │ 1 │ ['default'] │ JBOD │ 0 │ 0 │ 0 │

│ s3tiered │ default │ 1 │ ['default'] │ JBOD │ 50000000000 │ 0.1 │ 0 │

│ s3tiered │ s3cached │ 2 │ ['s3disk'] │ JBOD │ 0 │ 0.1 │ 0 │

└─────────────┴─────────────┴─────────────────┴─────────────┴─────────────┴────────────────────┴─────────────┴─────────────────────┘

example with a new table

CREATE TABLE test_s3

(

`A` Int64,

`S` String,

`D` Date

)

ENGINE = MergeTree

PARTITION BY D

ORDER BY A

SETTINGS storage_policy = 's3tiered';

insert into test_s3 select number, number, '2023-01-01' from numbers(1e9);

0 rows in set. Elapsed: 270.285 sec. Processed 1.00 billion rows, 8.00 GB (3.70 million rows/s., 29.60 MB/s.)

Table size is 7.65 GiB and it at the default disk (EBS):

select disk_name, partition, sum(rows), formatReadableSize(sum(bytes_on_disk)) size, count() part_count

from system.parts where table= 'test_s3' and active

group by disk_name, partition;

┌─disk_name─┬─partition──┬──sum(rows)─┬─size─────┬─part_count─┐

│ default │ 2023-01-01 │ 1000000000 │ 7.65 GiB │ 8 │

└───────────┴────────────┴────────────┴──────────┴────────────┘

It seems my EBS write speed is slower than S3 write speed:

alter table test_s3 move partition '2023-01-01' to volume 's3cached';

0 rows in set. Elapsed: 98.979 sec.

alter table test_s3 move partition '2023-01-01' to volume 'default';

0 rows in set. Elapsed: 127.741 sec.

Queries performance against EBS:

select * from test_s3 where A = 443;

1 row in set. Elapsed: 0.002 sec. Processed 8.19 thousand rows, 71.64 KB (3.36 million rows/s., 29.40 MB/s.)

select uniq(A) from test_s3;

1 row in set. Elapsed: 11.439 sec. Processed 1.00 billion rows, 8.00 GB (87.42 million rows/s., 699.33 MB/s.)

select count() from test_s3 where S like '%4422%'

1 row in set. Elapsed: 17.484 sec. Processed 1.00 billion rows, 17.89 GB (57.20 million rows/s., 1.02 GB/s.)

Let’s move data to S3

alter table test_s3 move partition '2023-01-01' to volume 's3cached';

0 rows in set. Elapsed: 81.068 sec.

select disk_name, partition, sum(rows), formatReadableSize(sum(bytes_on_disk)) size, count() part_count

from system.parts where table= 'test_s3' and active

group by disk_name, partition;

┌─disk_name─┬─partition──┬──sum(rows)─┬─size─────┬─part_count─┐

│ s3disk │ 2023-01-01 │ 1000000000 │ 7.65 GiB │ 8 │

└───────────┴────────────┴────────────┴──────────┴────────────┘

The first query execution against S3, the second against the cache (local EBS):

select * from test_s3 where A = 443;

1 row in set. Elapsed: 0.458 sec. Processed 8.19 thousand rows, 71.64 KB (17.88 thousand rows/s., 156.35 KB/s.)

1 row in set. Elapsed: 0.003 sec. Processed 8.19 thousand rows, 71.64 KB (3.24 million rows/s., 28.32 MB/s.)

select uniq(A) from test_s3;

1 row in set. Elapsed: 26.601 sec. Processed 1.00 billion rows, 8.00 GB (37.59 million rows/s., 300.74 MB/s.)

1 row in set. Elapsed: 8.675 sec. Processed 1.00 billion rows, 8.00 GB (115.27 million rows/s., 922.15 MB/s.)

select count() from test_s3 where S like '%4422%'

1 row in set. Elapsed: 33.586 sec. Processed 1.00 billion rows, 17.89 GB (29.77 million rows/s., 532.63 MB/s.)

1 row in set. Elapsed: 16.551 sec. Processed 1.00 billion rows, 17.89 GB (60.42 million rows/s., 1.08 GB/s.)

Cache introspection

select cache_base_path, formatReadableSize(sum(size)) from system.filesystem_cache group by 1;

┌─cache_base_path─────────────────────┬─formatReadableSize(sum(size))─┐

│ /var/lib/clickhouse/disks/s3_cache/ │ 7.64 GiB │

└─────────────────────────────────────┴───────────────────────────────┘

system drop FILESYSTEM cache;

select cache_base_path, formatReadableSize(sum(size)) from system.filesystem_cache group by 1;

0 rows in set. Elapsed: 0.005 sec.

select * from test_s3 where A = 443;

1 row in set. Elapsed: 0.221 sec. Processed 8.19 thousand rows, 71.64 KB (37.10 thousand rows/s., 324.47 KB/s.)

select cache_base_path, formatReadableSize(sum(size)) from system.filesystem_cache group by 1;

┌─cache_base_path─────────────────────┬─formatReadableSize(sum(size))─┐

│ /var/lib/clickhouse/disks/s3_cache/ │ 105.95 KiB │

└─────────────────────────────────────┴───────────────────────────────┘

No data is stored locally (except system log tables).

select name, formatReadableSize(free_space) free_space, formatReadableSize(total_space) total_space from system.disks;

┌─name────┬─free_space─┬─total_space─┐

│ cache │ 16.00 EiB │ 16.00 EiB │

│ default │ 48.97 GiB │ 49.09 GiB │

│ s3disk │ 16.00 EiB │ 16.00 EiB │

└─────────┴────────────┴─────────────┘

example with an existing table

The mydata table is created without the explicitly defined storage_policy, it means that implicitly storage_policy=default / volume=default / disk=default.

select disk_name, partition, sum(rows), formatReadableSize(sum(bytes_on_disk)) size, count() part_count

from system.parts where table='mydata' and active

group by disk_name, partition

order by partition;

┌─disk_name─┬─partition─┬─sum(rows)─┬─size───────┬─part_count─┐

│ default │ 202201 │ 516666677 │ 4.01 GiB │ 13 │

│ default │ 202202 │ 466666657 │ 3.64 GiB │ 13 │

│ default │ 202203 │ 16666666 │ 138.36 MiB │ 10 │

│ default │ 202301 │ 516666677 │ 4.01 GiB │ 10 │

│ default │ 202302 │ 466666657 │ 3.64 GiB │ 10 │

│ default │ 202303 │ 16666666 │ 138.36 MiB │ 10 │

└───────────┴───────────┴───────────┴────────────┴────────────┘

-- Let's change the storage policy, this command instant and changes only metadata of the table, and possible because the new storage policy and the old has the volume `default`.

alter table mydata modify setting storage_policy = 's3tiered';

0 rows in set. Elapsed: 0.057 sec.

straightforward (heavy) approach

-- Let's add TTL, it's a heavy command and takes a lot time and creates the performance impact, because it reads `D` column and moves parts to s3.

alter table mydata modify TTL D + interval 1 year to volume 's3cached';

0 rows in set. Elapsed: 140.661 sec.

┌─disk_name─┬─partition─┬─sum(rows)─┬─size───────┬─part_count─┐

│ s3disk │ 202201 │ 516666677 │ 4.01 GiB │ 13 │

│ s3disk │ 202202 │ 466666657 │ 3.64 GiB │ 13 │

│ s3disk │ 202203 │ 16666666 │ 138.36 MiB │ 10 │

│ default │ 202301 │ 516666677 │ 4.01 GiB │ 10 │

│ default │ 202302 │ 466666657 │ 3.64 GiB │ 10 │

│ default │ 202303 │ 16666666 │ 138.36 MiB │ 10 │

└───────────┴───────────┴───────────┴────────────┴────────────┘

gentle (manual) approach

-- alter modify TTL changes only metadata of the table and applied to only newly insterted data.

set materialize_ttl_after_modify=0;

alter table mydata modify TTL D + interval 1 year to volume 's3cached';

0 rows in set. Elapsed: 0.049 sec.

-- move data slowly partition by partition

alter table mydata move partition id '202201' to volume 's3cached';

0 rows in set. Elapsed: 49.410 sec.

alter table mydata move partition id '202202' to volume 's3cached';

0 rows in set. Elapsed: 36.952 sec.

alter table mydata move partition id '202203' to volume 's3cached';

0 rows in set. Elapsed: 4.808 sec.

-- data can be optimized to reduce number of parts before moving it to s3

optimize table mydata partition id '202301' final;

0 rows in set. Elapsed: 66.551 sec.

alter table mydata move partition id '202301' to volume 's3cached';

0 rows in set. Elapsed: 33.332 sec.

┌─disk_name─┬─partition─┬─sum(rows)─┬─size───────┬─part_count─┐

│ s3disk │ 202201 │ 516666677 │ 4.01 GiB │ 13 │

│ s3disk │ 202202 │ 466666657 │ 3.64 GiB │ 13 │

│ s3disk │ 202203 │ 16666666 │ 138.36 MiB │ 10 │

│ s3disk │ 202301 │ 516666677 │ 4.01 GiB │ 1 │ -- optimized partition

│ default │ 202302 │ 466666657 │ 3.64 GiB │ 13 │

│ default │ 202303 │ 16666666 │ 138.36 MiB │ 10 │

└───────────┴───────────┴───────────┴────────────┴────────────┘

S3 and ClickHouse® start time

Let’s create a table with 1000 parts and move them to s3.

CREATE TABLE test_s3( A Int64, S String, D Date)

ENGINE = MergeTree PARTITION BY D ORDER BY A

SETTINGS storage_policy = 's3tiered';

insert into test_s3 select number, number, toDate('2000-01-01') + intDiv(number,1e6) from numbers(1e9);

optimize table test_s3 final settings optimize_skip_merged_partitions = 1;

select disk_name, sum(rows), formatReadableSize(sum(bytes_on_disk)) size, count() part_count

from system.parts where table= 'test_s3' and active group by disk_name;

┌─disk_name─┬──sum(rows)─┬─size─────┬─part_count─┐

│ default │ 1000000000 │ 7.64 GiB │ 1000 │

└───────────┴────────────┴──────────┴────────────┘

alter table test_s3 modify ttl D + interval 1 year to disk 's3disk';

select disk_name, sum(rows), formatReadableSize(sum(bytes_on_disk)) size, count() part_count

from system.parts where table= 'test_s3' and active

group by disk_name;

┌─disk_name─┬─sum(rows)─┬─size─────┬─part_count─┐

│ default │ 755000000 │ 5.77 GiB │ 755 │

│ s3disk │ 245000000 │ 1.87 GiB │ 245 │

└───────────┴───────────┴──────────┴────────────┘

---- several minutes later ----

┌─disk_name─┬──sum(rows)─┬─size─────┬─part_count─┐

│ s3disk │ 1000000000 │ 7.64 GiB │ 1000 │

└───────────┴────────────┴──────────┴────────────┘

start time

:) select name, value from system.merge_tree_settings where name = 'max_part_loading_threads';

┌─name─────────────────────┬─value─────┐

│ max_part_loading_threads │ 'auto(4)' │

└──────────────────────────┴───────────┘

# systemctl stop clickhouse-server

# time systemctl start clickhouse-server / real 4m26.766s

# systemctl stop clickhouse-server

# time systemctl start clickhouse-server / real 4m24.263s

# cat /etc/clickhouse-server/config.d/max_part_loading_threads.xml

<?xml version="1.0"?>

<clickhouse>

<merge_tree>

<max_part_loading_threads>128</max_part_loading_threads>

</merge_tree>

</clickhouse>

# systemctl stop clickhouse-server

# time systemctl start clickhouse-server / real 0m11.225s

# systemctl stop clickhouse-server

# time systemctl start clickhouse-server / real 0m10.797s

<max_part_loading_threads>256</max_part_loading_threads>

# systemctl stop clickhouse-server

# time systemctl start clickhouse-server / real 0m8.474s

# systemctl stop clickhouse-server

# time systemctl start clickhouse-server / real 0m8.130s

1.5 - S3Disk

Settings

<clickhouse>

<storage_configuration>

<disks>

<s3>

<type>s3</type>

<endpoint>http://s3.us-east-1.amazonaws.com/BUCKET_NAME/test_s3_disk/</endpoint>

<access_key_id>ACCESS_KEY_ID</access_key_id>

<secret_access_key>SECRET_ACCESS_KEY</secret_access_key>

<skip_access_check>true</skip_access_check>

<send_metadata>true</send_metadata>

</s3>

</disks>

</storage_configuration>

</clickhouse>

skip_access_check — if true, it’s possible to use read only credentials with regular MergeTree table. But you would need to disable merges (

prefer_not_to_mergesetting) on s3 volume as well.send_metadata — if true, ClickHouse® will populate s3 object with initial part & file path, which allow you to recover metadata from s3 and make debug easier.

Restore metadata from S3

Default

Limitations:

- ClickHouse need RW access to this bucket

In order to restore metadata, you would need to create restore file in metadata_path/_s3_disk_name_ directory:

touch /var/lib/clickhouse/disks/_s3_disk_name_/restore

In that case ClickHouse would restore to the same bucket and path and update only metadata files in s3 bucket.

Custom

Limitations:

- ClickHouse needs RO access to the old bucket and RW to the new.

- ClickHouse will copy objects in case of restoring to a different bucket or path.

If you would like to change bucket or path, you need to populate restore file with settings in key=value format:

cat /var/lib/clickhouse/disks/_s3_disk_name_/restore

source_bucket=s3disk

source_path=vol1/

Links

2 - AggregateFunction(uniq, UUID) doubled after ClickHouse® upgrade

What happened

After ClickHouse® upgrade from version pre 21.6 to version after 21.6, count of unique UUID in AggregatingMergeTree tables nearly doubled in case of merging of data which was generated in different ClickHouse versions.

Why happened

In pull request which changed the internal representation of big integers data types (and UUID). SipHash64 hash-function used for uniq aggregation function for UUID data type was replaced with intHash64, which leads to different result for the same UUID value across different ClickHouse versions. Therefore, it results in doubling of counts, when uniqState created by different ClickHouse versions being merged together.

Related issue .

Solution

You need to replace any occurrence of uniqState(uuid) in MATERIALIZED VIEWs with uniqState(sipHash64(uuid)) and change data type for already saved data from AggregateFunction(uniq, UUID) to AggregateFunction(uniq, UInt64), because result data type of sipHash64 is UInt64.

-- On ClickHouse version 21.3

CREATE TABLE uniq_state

(

`key` UInt32,

`value` AggregateFunction(uniq, UUID)

)

ENGINE = MergeTree

ORDER BY key

INSERT INTO uniq_state SELECT

number % 10000 AS key,

uniqState(reinterpretAsUUID(number))

FROM numbers(1000000)

GROUP BY key

Ok.

0 rows in set. Elapsed: 0.404 sec. Processed 1.05 million rows, 8.38 MB (2.59 million rows/s., 20.74 MB/s.)

SELECT

key % 20,

uniqMerge(value)

FROM uniq_state

GROUP BY key % 20

┌─modulo(key, 20)─┬─uniqMerge(value)─┐

│ 0 │ 50000 │

│ 1 │ 50000 │

│ 2 │ 50000 │

│ 3 │ 50000 │

│ 4 │ 50000 │

│ 5 │ 50000 │

│ 6 │ 49999 │

│ 7 │ 50000 │

│ 8 │ 49999 │

│ 9 │ 50000 │

│ 10 │ 50000 │

│ 11 │ 50000 │

│ 12 │ 50000 │

│ 13 │ 50000 │

│ 14 │ 50000 │

│ 15 │ 50000 │

│ 16 │ 50000 │

│ 17 │ 50000 │

│ 18 │ 50000 │

│ 19 │ 50000 │

└─────────────────┴──────────────────┘

-- After upgrade of ClickHouse to 21.8

SELECT

key % 20,

uniqMerge(value)

FROM uniq_state

GROUP BY key % 20

┌─modulo(key, 20)─┬─uniqMerge(value)─┐

│ 0 │ 50000 │

│ 1 │ 50000 │

│ 2 │ 50000 │

│ 3 │ 50000 │

│ 4 │ 50000 │

│ 5 │ 50000 │

│ 6 │ 49999 │

│ 7 │ 50000 │

│ 8 │ 49999 │

│ 9 │ 50000 │

│ 10 │ 50000 │

│ 11 │ 50000 │

│ 12 │ 50000 │

│ 13 │ 50000 │

│ 14 │ 50000 │

│ 15 │ 50000 │

│ 16 │ 50000 │

│ 17 │ 50000 │

│ 18 │ 50000 │

│ 19 │ 50000 │

└─────────────────┴──────────────────┘

20 rows in set. Elapsed: 0.240 sec. Processed 10.00 thousand rows, 1.16 MB (41.72 thousand rows/s., 4.86 MB/s.)

CREATE TABLE uniq_state_2

ENGINE = MergeTree

ORDER BY key AS

SELECT *

FROM uniq_state

Ok.

0 rows in set. Elapsed: 0.128 sec. Processed 10.00 thousand rows, 1.16 MB (78.30 thousand rows/s., 9.12 MB/s.)

INSERT INTO uniq_state_2 SELECT

number % 10000 AS key,

uniqState(reinterpretAsUUID(number))

FROM numbers(1000000)

GROUP BY key

Ok.

0 rows in set. Elapsed: 0.266 sec. Processed 1.05 million rows, 8.38 MB (3.93 million rows/s., 31.48 MB/s.)

SELECT

key % 20,

uniqMerge(value)

FROM uniq_state_2

GROUP BY key % 20

┌─modulo(key, 20)─┬─uniqMerge(value)─┐

│ 0 │ 99834 │ <- Count of unique values nearly doubled.

│ 1 │ 100219 │

│ 2 │ 100128 │

│ 3 │ 100457 │

│ 4 │ 100272 │

│ 5 │ 100279 │

│ 6 │ 99372 │

│ 7 │ 99450 │

│ 8 │ 99974 │

│ 9 │ 99632 │

│ 10 │ 99562 │

│ 11 │ 100660 │

│ 12 │ 100439 │

│ 13 │ 100252 │

│ 14 │ 100650 │

│ 15 │ 99320 │

│ 16 │ 100095 │

│ 17 │ 99632 │

│ 18 │ 99540 │

│ 19 │ 100098 │

└─────────────────┴──────────────────┘

20 rows in set. Elapsed: 0.356 sec. Processed 20.00 thousand rows, 2.33 MB (56.18 thousand rows/s., 6.54 MB/s.)

CREATE TABLE uniq_state_3

ENGINE = MergeTree

ORDER BY key AS

SELECT *

FROM uniq_state

0 rows in set. Elapsed: 0.126 sec. Processed 10.00 thousand rows, 1.16 MB (79.33 thousand rows/s., 9.24 MB/s.)

-- Option 1, create separate column

ALTER TABLE uniq_state_3

ADD COLUMN `value_2` AggregateFunction(uniq, UInt64) DEFAULT unhex(hex(value));

ALTER TABLE uniq_state_3

UPDATE value_2 = value_2 WHERE 1;

SELECT *

FROM system.mutations

WHERE is_done = 0;

Ok.

0 rows in set. Elapsed: 0.008 sec.

INSERT INTO uniq_state_3 (key, value_2) SELECT

number % 10000 AS key,

uniqState(sipHash64(reinterpretAsUUID(number)))

FROM numbers(1000000)

GROUP BY key

Ok.

0 rows in set. Elapsed: 0.337 sec. Processed 1.05 million rows, 8.38 MB (3.11 million rows/s., 24.89 MB/s.)

SELECT

key % 20,

uniqMerge(value),

uniqMerge(value_2)

FROM uniq_state_3

GROUP BY key % 20

┌─modulo(key, 20)─┬─uniqMerge(value)─┬─uniqMerge(value_2)─┐

│ 0 │ 50000 │ 50000 │

│ 1 │ 50000 │ 50000 │

│ 2 │ 50000 │ 50000 │

│ 3 │ 50000 │ 50000 │

│ 4 │ 50000 │ 50000 │

│ 5 │ 50000 │ 50000 │

│ 6 │ 49999 │ 49999 │

│ 7 │ 50000 │ 50000 │

│ 8 │ 49999 │ 49999 │

│ 9 │ 50000 │ 50000 │

│ 10 │ 50000 │ 50000 │

│ 11 │ 50000 │ 50000 │

│ 12 │ 50000 │ 50000 │

│ 13 │ 50000 │ 50000 │

│ 14 │ 50000 │ 50000 │

│ 15 │ 50000 │ 50000 │

│ 16 │ 50000 │ 50000 │

│ 17 │ 50000 │ 50000 │

│ 18 │ 50000 │ 50000 │

│ 19 │ 50000 │ 50000 │

└─────────────────┴──────────────────┴────────────────────┘

20 rows in set. Elapsed: 0.768 sec. Processed 20.00 thousand rows, 4.58 MB (26.03 thousand rows/s., 5.96 MB/s.)

-- Option 2, modify column in-place with String as intermediate data type.

ALTER TABLE uniq_state_3

MODIFY COLUMN `value` String

Ok.

0 rows in set. Elapsed: 0.280 sec.

ALTER TABLE uniq_state_3

MODIFY COLUMN `value` AggregateFunction(uniq, UInt64)

Ok.

0 rows in set. Elapsed: 0.254 sec.

INSERT INTO uniq_state_3 (key, value) SELECT

number % 10000 AS key,

uniqState(sipHash64(reinterpretAsUUID(number)))

FROM numbers(1000000)

GROUP BY key

Ok.

0 rows in set. Elapsed: 0.554 sec. Processed 1.05 million rows, 8.38 MB (1.89 million rows/s., 15.15 MB/s.)

SELECT

key % 20,

uniqMerge(value),

uniqMerge(value_2)

FROM uniq_state_3

GROUP BY key % 20

┌─modulo(key, 20)─┬─uniqMerge(value)─┬─uniqMerge(value_2)─┐

│ 0 │ 50000 │ 50000 │

│ 1 │ 50000 │ 50000 │

│ 2 │ 50000 │ 50000 │

│ 3 │ 50000 │ 50000 │

│ 4 │ 50000 │ 50000 │

│ 5 │ 50000 │ 50000 │

│ 6 │ 49999 │ 49999 │

│ 7 │ 50000 │ 50000 │

│ 8 │ 49999 │ 49999 │

│ 9 │ 50000 │ 50000 │

│ 10 │ 50000 │ 50000 │

│ 11 │ 50000 │ 50000 │

│ 12 │ 50000 │ 50000 │

│ 13 │ 50000 │ 50000 │

│ 14 │ 50000 │ 50000 │

│ 15 │ 50000 │ 50000 │

│ 16 │ 50000 │ 50000 │

│ 17 │ 50000 │ 50000 │

│ 18 │ 50000 │ 50000 │

│ 19 │ 50000 │ 50000 │

└─────────────────┴──────────────────┴────────────────────┘

20 rows in set. Elapsed: 0.589 sec. Processed 30.00 thousand rows, 6.87 MB (50.93 thousand rows/s., 11.66 MB/s.)

SHOW CREATE TABLE uniq_state_3;

CREATE TABLE default.uniq_state_3

(

`key` UInt32,

`value` AggregateFunction(uniq, UInt64),

`value_2` AggregateFunction(uniq, UInt64) DEFAULT unhex(hex(value))

)

ENGINE = MergeTree

ORDER BY key

SETTINGS index_granularity = 8192

-- Option 3, CAST uniqState(UInt64) to String.

CREATE TABLE uniq_state_4

ENGINE = MergeTree

ORDER BY key AS

SELECT *

FROM uniq_state

Ok.

0 rows in set. Elapsed: 0.146 sec. Processed 10.00 thousand rows, 1.16 MB (68.50 thousand rows/s., 7.98 MB/s.)

INSERT INTO uniq_state_4 (key, value) SELECT

number % 10000 AS key,

CAST(uniqState(sipHash64(reinterpretAsUUID(number))), 'String')

FROM numbers(1000000)

GROUP BY key

Ok.

0 rows in set. Elapsed: 0.476 sec. Processed 1.05 million rows, 8.38 MB (2.20 million rows/s., 17.63 MB/s.)

SELECT

key % 20,

uniqMerge(value)

FROM uniq_state_4

GROUP BY key % 20

┌─modulo(key, 20)─┬─uniqMerge(value)─┐

│ 0 │ 50000 │

│ 1 │ 50000 │

│ 2 │ 50000 │

│ 3 │ 50000 │

│ 4 │ 50000 │

│ 5 │ 50000 │

│ 6 │ 49999 │

│ 7 │ 50000 │

│ 8 │ 49999 │

│ 9 │ 50000 │

│ 10 │ 50000 │

│ 11 │ 50000 │

│ 12 │ 50000 │

│ 13 │ 50000 │

│ 14 │ 50000 │

│ 15 │ 50000 │

│ 16 │ 50000 │

│ 17 │ 50000 │

│ 18 │ 50000 │

│ 19 │ 50000 │

└─────────────────┴──────────────────┘

20 rows in set. Elapsed: 0.281 sec. Processed 20.00 thousand rows, 2.33 MB (71.04 thousand rows/s., 8.27 MB/s.)

SHOW CREATE TABLE uniq_state_4;

CREATE TABLE default.uniq_state_4

(

`key` UInt32,

`value` AggregateFunction(uniq, UUID)

)

ENGINE = MergeTree

ORDER BY key

SETTINGS index_granularity = 8192

3 - Can not connect to my ClickHouse® server

Can not connect to my ClickHouse® server

Errors like “Connection reset by peer, while reading from socket”

Ensure that the

clickhouse-serveris runningsystemctl status clickhouse-serverIf server was restarted recently and don’t accept the connections after the restart - most probably it still just starting. During the startup sequence it need to iterate over all data folders in /var/lib/clickhouse-server In case if you have a very high number of folders there (usually caused by a wrong partitioning, or a very high number of tables / databases) that startup time can take a lot of time (same can happen if disk is very slow, for example NFS).

You can check that by looking for ‘Ready for connections’ line in

/var/log/clickhouse-server/clickhouse-server.log(Informationlog level needed)Ensure you use the proper port ip / interface?

Ensure you’re not trying to connect to secure port without tls / https or vice versa.

For

clickhouse-client- pay attention on host / port / secure flags.Ensure the interface you’re connecting to is the one which ClickHouse listens (by default ClickHouse listens only localhost).

Note: If you uncomment line

<listen_host>0.0.0.0</listen_host>only - ClickHouse will listen only ipv4 interfaces, while the localhost (used byclickhouse-client) may be resolved to ipv6 address. Andclickhouse-clientmay be failing to connect.How to check which interfaces / ports do ClickHouse listen?

sudo lsof -i -P -n | grep LISTEN echo listen_host sudo clickhouse-extract-from-config --config=/etc/clickhouse-server/config.xml --key=listen_host echo tcp_port sudo clickhouse-extract-from-config --config=/etc/clickhouse-server/config.xml --key=tcp_port echo tcp_port_secure sudo clickhouse-extract-from-config --config=/etc/clickhouse-server/config.xml --key=tcp_port_secure echo http_port sudo clickhouse-extract-from-config --config=/etc/clickhouse-server/config.xml --key=http_port echo https_port sudo clickhouse-extract-from-config --config=/etc/clickhouse-server/config.xml --key=https_portFor secure connection:

- ensure that server uses some certificate which can be validated by the client

- OR disable certificate checks on the client (UNSECURE)

Check for errors in /var/log/clickhouse-server/clickhouse-server.err.log ?

Is ClickHouse able to serve some trivial tcp / http requests from localhost?

curl 127.0.0.1:9200 curl 127.0.0.1:8123Check number of sockets opened by ClickHouse

sudo lsof -i -a -p $(pidof clickhouse-server) # or (adjust 9000 / 8123 ports if needed) netstat -tn 2>/dev/null | tail -n +3 | awk '{ printf("%s\t%s\t%s\t%s\t%s\t%s\n", $1, $2, $3, $4, $5, $6) }' | clickhouse-local -S "Proto String, RecvQ Int64, SendQ Int64, LocalAddress String, ForeignAddress String, State LowCardinality(String)" --query="SELECT * FROM table WHERE LocalAddress like '%:9000' FORMAT PrettyCompact" netstat -tn 2>/dev/null | tail -n +3 | awk '{ printf("%s\t%s\t%s\t%s\t%s\t%s\n", $1, $2, $3, $4, $5, $6) }' | clickhouse-local -S "Proto String, RecvQ Int64, SendQ Int64, LocalAddress String, ForeignAddress String, State LowCardinality(String)" --query="SELECT * FROM table WHERE LocalAddress like '%:8123' FORMAT PrettyCompact"ClickHouse has a limit of number of open connections (4000 by default).

Check also:

# system overall support limited number of connections it can handle netstat # you can also be reaching of of the process ulimits (Max open files) cat /proc/$(pidof -s clickhouse-server)/limitsCheck firewall / selinux rules (if used)

4 - cgroups and kubernetes cloud providers

Why my ClickHouse® is slow after upgrade to version 22.2 and higher?

The probable reason is that ClickHouse 22.2 started to respect cgroups (Respect cgroups limits in max_threads autodetection. #33342 (JaySon ).

You can observe that max_threads = 1

SELECT

name,

value

FROM system.settings

WHERE name = 'max_threads'

┌─name────────┬─value─────┐

│ max_threads │ 'auto(1)' │

└─────────────┴───────────┘

This makes ClickHouse to execute all queries with a single thread (normal behavior is half of available CPU cores, cores = 64, then ‘auto(32)’).

We observe this cgroups behavior with AWS EKS (Kubernetes) environment and Altinity ClickHouse Operator in case if requests.cpu and limits.cpu are not set for a resource.

Workaround

We suggest to set requests.cpu = half of available CPU cores, and limits.cpu = CPU cores.

For example in case of 16 CPU cores:

resources:

requests:

memory: ...

cpu: 8

limits:

memory: ....

cpu: 16

Then you should get a new result:

SELECT

name,

value

FROM system.settings

WHERE name = 'max_threads'

┌─name────────┬─value─────┐

│ max_threads │ 'auto(8)' │

└─────────────┴───────────┘

in depth

For some reason AWS EKS sets cgroup kernel parameters in case of empty requests.cpu & limits.cpu into these:

# cat /sys/fs/cgroup/cpu/cpu.cfs_quota_us

-1

# cat /sys/fs/cgroup/cpu/cpu.cfs_period_us

100000

# cat /sys/fs/cgroup/cpu/cpu.shares

2

This makes ClickHouse to set max_threads = 1 because of

cgroup_share = /sys/fs/cgroup/cpu/cpu.shares (2)

PER_CPU_SHARES = 1024

share_count = ceil( cgroup_share / PER_CPU_SHARES ) ---> ceil(2 / 1024) ---> 1

Fix

Incorrect calculation was fixed in https://github.com/ClickHouse/ClickHouse/pull/35815 and will work correctly on newer releases.

5 - Transforming ClickHouse logs to ndjson using Vector.dev

ClickHouse 22.8

Starting from 22.8 version, ClickHouse support writing logs in JSON format:

<?xml version="1.0"?>

<clickhouse>

<logger>

<!-- Structured log formatting:

You can specify log format(for now, JSON only). In that case, the console log will be printed

in specified format like JSON.

For example, as below:

{"date_time":"1650918987.180175","thread_name":"#1","thread_id":"254545","level":"Trace","query_id":"","logger_name":"BaseDaemon","message":"Received signal 2","source_file":"../base/daemon/BaseDaemon.cpp; virtual void SignalListener::run()","source_line":"192"}

To enable JSON logging support, just uncomment <formatting> tag below.

-->

<formatting>json</formatting>

</logger>

</clickhouse>

Transforming ClickHouse logs to ndjson using Vector.dev"

Installation of vector.dev

# arm64

wget https://packages.timber.io/vector/0.15.2/vector_0.15.2-1_arm64.deb

# amd64

wget https://packages.timber.io/vector/0.15.2/vector_0.15.2-1_amd64.deb

dpkg -i vector_0.15.2-1_*.deb

systemctl stop vector

mkdir /var/log/clickhouse-server-json

chown vector.vector /var/log/clickhouse-server-json

usermod -a -G clickhouse vector

vector config

# cat /etc/vector/vector.toml

data_dir = "/var/lib/vector"

[sources.clickhouse-log]

type = "file"

include = [ "/var/log/clickhouse-server/clickhouse-server.log" ]

fingerprinting.strategy = "device_and_inode"

message_start_indicator = '^\d+\.\d+\.\d+ \d+:\d+:\d+'

multi_line_timeout = 1000

[transforms.clickhouse-log-text]

inputs = [ "clickhouse-log" ]

type = "remap"

source = '''

. |= parse_regex!(.message, r'^(?P<timestamp>\d+\.\d+\.\d+ \d+:\d+:\d+\.\d+) \[\s?(?P<thread_id>\d+)\s?\] \{(?P<query_id>.*)\} <(?P<severity>\w+)> (?s)(?P<message>.*$)')

'''

[sinks.emit-clickhouse-log-json]

type = "file"

inputs = [ "clickhouse-log-text" ]

compression = "none"

path = "/var/log/clickhouse-server-json/clickhouse-server.%Y-%m-%d.ndjson"

encoding.only_fields = ["timestamp", "thread_id", "query_id", "severity", "message" ]

encoding.codec = "ndjson"

start

systemctl start vector

tail /var/log/clickhouse-server-json/clickhouse-server.2022-04-21.ndjson

{"message":"DiskLocal: Reserving 1.00 MiB on disk `default`, having unreserved 166.80 GiB.","query_id":"","severity":"Debug","thread_id":"283239","timestamp":"2022.04.21 13:43:21.164660"}

{"message":"MergedBlockOutputStream: filled checksums 202204_67118_67118_0 (state Temporary)","query_id":"","severity":"Trace","thread_id":"283239","timestamp":"2022.04.21 13:43:21.166810"}

{"message":"system.metric_log (e3365172-4c9b-441b-b803-756ae030e741): Renaming temporary part tmp_insert_202204_67118_67118_0 to 202204_171703_171703_0.","query_id":"","severity":"Trace","thread_id":"283239","timestamp":"2022.04.21 13:43:21.167226"}

....

sink logs into ClickHouse table

Be careful with logging ClickHouse messages into the same ClickHouse instance, it will cause endless recursive self-logging.

create table default.clickhouse_logs(

timestamp DateTime64(3),

host LowCardinality(String),

thread_id LowCardinality(String),

severity LowCardinality(String),

query_id String,

message String)

Engine = MergeTree

Partition by toYYYYMM(timestamp)

Order by (toStartOfHour(timestamp), host, severity, query_id);

create user vector identified by 'vector1234';

grant insert on default.clickhouse_logs to vector;

create settings profile or replace profile_vector settings log_queries=0 readonly TO vector;

[sinks.clickhouse-output-clickhouse]

inputs = ["clickhouse-log-text"]

type = "clickhouse"

host = "http://localhost:8123"

database = "default"

auth.strategy = "basic"

auth.user = "vector"

auth.password = "vector1234"

healthcheck = true

table = "clickhouse_logs"

encoding.timestamp_format = "unix"

buffer.type = "disk"

buffer.max_size = 104900000

buffer.when_full = "block"

request.in_flight_limit = 20

encoding.only_fields = ["host", "timestamp", "thread_id", "query_id", "severity", "message"]

select * from default.clickhouse_logs limit 10;

┌───────────────timestamp─┬─host───────┬─thread_id─┬─severity─┬─query_id─┬─message─────────────────────────────────────────────────────

│ 2022-04-21 19:08:13.443 │ clickhouse │ 283155 │ Debug │ │ HTTP-Session: 13e87050-7824-46b0-9bd5-29469a1b102f Authentic

│ 2022-04-21 19:08:13.443 │ clickhouse │ 283155 │ Debug │ │ HTTP-Session: 13e87050-7824-46b0-9bd5-29469a1b102f Authentic

│ 2022-04-21 19:08:13.443 │ clickhouse │ 283155 │ Debug │ │ HTTP-Session: 13e87050-7824-46b0-9bd5-29469a1b102f Creating

│ 2022-04-21 19:08:13.447 │ clickhouse │ 283155 │ Debug │ │ MemoryTracker: Peak memory usage (for query): 4.00 MiB.

│ 2022-04-21 19:08:13.447 │ clickhouse │ 283155 │ Debug │ │ HTTP-Session: 13e87050-7824-46b0-9bd5-29469a1b102f Destroyin

│ 2022-04-21 19:08:13.495 │ clickhouse │ 283155 │ Debug │ │ HTTP-Session: f7eb829f-7b3a-4c43-8a41-a2e6676177fb Authentic

│ 2022-04-21 19:08:13.495 │ clickhouse │ 283155 │ Debug │ │ HTTP-Session: f7eb829f-7b3a-4c43-8a41-a2e6676177fb Authentic

│ 2022-04-21 19:08:13.495 │ clickhouse │ 283155 │ Debug │ │ HTTP-Session: f7eb829f-7b3a-4c43-8a41-a2e6676177fb Creating

│ 2022-04-21 19:08:13.496 │ clickhouse │ 283155 │ Debug │ │ MemoryTracker: Peak memory usage (for query): 4.00 MiB.

│ 2022-04-21 19:08:13.496 │ clickhouse │ 283155 │ Debug │ │ HTTP-Session: f7eb829f-7b3a-4c43-8a41-a2e6676177fb Destroyin

└─────────────────────────┴────────────┴───────────┴──────────┴──────────┴─────────────────────────────────────────────────────────────

6 - Altinity Kubernetes Operator For ClickHouse®

Altinity Kubernetes Operator for ClickHouse® Documentation

https://github.com/Altinity/clickhouse-operator/blob/master/docs/README.md

7 - ClickHouse® and different filesystems

In general ClickHouse® should work with any POSIX-compatible filesystem.

- hard links and soft links support is mandatory.

- ClickHouse can use O_DIRECT mode to bypass the cache (and async io)

- ClickHouse can use renameat2 command for some atomic operations (not all the filesystems support that).

- depending on the schema and details of the usage the filesystem load can vary between the setup. The most natural load - is high throughput, with low or moderate IOPS.

- data is compressed in ClickHouse (LZ4 by default), while indexes / marks / metadata files - no. Enabling disk-level compression can sometimes improve the compression, but can affect read / write speed.

ext4

no issues, fully supported.

The minimum kernel version required is 3.15 (newer are recommended)

XFS

Performance issues reported by users, use on own risk. Old kernels are not recommended (4.0 or newer is recommended).

According to the users’ feedback, XFS behaves worse with ClickHouse under heavy load. We don’t have real proofs/benchmarks though, example reports:

- In GitHub there are complaints about XFS from Cloudflare.

- Recently my colleague discovered that two of ClickHouse servers perform worse in a cluster than others and they found that they accidentally set up those servers with XFS instead of Ext4.

- in the system journal you can sometimes see reports like ’task XYZ blocked for more than 120 seconds’ and stack trace pointing to XFS code (example: https://gist.github.com/filimonov/85b894268f978c2ccc18ea69bae5adbd )

- system goes to 99% io kernel under load sometimes.

- we have XFS, sometimes ClickHouse goes to “sleep” because XFS daemon is doing smth unknown

Maybe the above problem can be workaround by some tuning/settings, but so far we do not have a working and confirmed way to do this.

ZFS

Limitations exist, extra tuning may be needed, and having more RAM is recommended. Old kernels are not recommended.

Memory usage control - ZFS adaptive replacement cache (ARC) can take a lot of RAM. It can be the reason of out-of-memory issues when memory is also requested by the ClickHouse.

- It seems that the most important thing is zfs_arc_max - you just need to limit the maximum size of the ARC so that the sum of the maximum size of the arc + the CH itself does not exceed the size of the available RAM. For example, we set a limit of 80% RAM for ClickHouse and 10% for ARC. 10% will remain for the system and other applications

Tuning:

- another potentially interesting setting is primarycache=metadata, see benchmark example: https://www.ikus-soft.com/en/blog/2018-05-23-proxmox-primarycache-all-metadata/

- examples of tuning ZFS for MySQL https://wiki.freebsd.org/ZFSTuningGuide - perhaps some of this can also be useful (atime, recordsize) but everything needs to be carefully checked with benchmarks (I have no way).

- best practices: https://efim360.ru/zfs-best-practices-guide/

important note: In versions before 2.2 ZFS does not support the renameat2 command, which is used by the Atomic database engine, and

therefore some of the Atomic functionality will not be available.

In old versions of ClickHouse, you can face issues with the O_DIRECT mode.

Also there is a well-known (and controversial) Linus Torvalds opinion: “Don’t Use ZFS on Linux” [1] , [2] , [3] .

BTRFS

Not enough information. Some users report performance improvement for their use case.

ReiserFS

Not enough information.

Lustre

There are reports that some people successfully use it in their setups. A fast network is required.

There were some reports about data damage on the disks on older ClickHouse versions, which could be caused by the issues with O_DIRECT or async io support on Lustre.

NFS (and EFS)

According to the reports - it works, throughput depends a lot on the network speed. IOPS / number of file operations per seconds can be super low (due to the locking mechanism).

https://github.com/ClickHouse/ClickHouse/issues/31113

MooseFS

There are installations using that. No extra info.

GlusterFS

There are installations using that. No extra info.

Ceph

There are installations using that. Some information: https://github.com/ClickHouse/ClickHouse/issues/8315

8 - ClickHouse® Access Control and Account Management (RBAC)

Documentation https://clickhouse.com/docs/en/operations/access-rights/

Enable ClickHouse® RBAC and create admin user

Create an admin user like (root in MySQL or postgres in PostgreSQL) to do the DBA/admin ops in the user.xml file and set the access management property for the admin user

<clickhouse>

<users>

<default>

....

</default>

<admin>

<!--

Password could be specified in plaintext or in SHA256 (in hex format).

If you want to specify password in plaintext (not recommended), place it in 'password' element.

Example: <password>qwerty</password>.

Password could be empty.

If you want to specify SHA256, place it in 'password_sha256_hex' element.

Example: <password_sha256_hex>65e84be33532fb784c48129675f9eff3a682b27168c0ea744b2cf58ee02337c5</password_sha256_hex>

Restrictions of SHA256: impossibility to connect to ClickHouse using MySQL JS client (as of July 2019).

If you want to specify double SHA1, place it in 'password_double_sha1_hex' element.

Example: <password_double_sha1_hex>e395796d6546b1b65db9d665cd43f0e858dd4303</password_double_sha1_hex>

-->

<password></password>

<networks>

<ip>::/0</ip>

</networks>

<!-- Settings profile for user. -->

<profile>default</profile>

<!-- Quota for user. -->

<quota>default</quota>

<!-- Set This parameter to Enable RBAC

Admin user can create other users and grant rights to them. -->

<access_management>1</access_management>

</admin>

...

</clickhouse>

default user

As default is used for many internal and background operations, so it is not convenient to set it up with a password, because you would have to change it in many configs/parts. Best way to secure the default user is only allow localhost or trusted network connections like this in users.xml:

<clickhouse>

<users>

<default>

......

<networks>

<ip>127.0.0.1/8</ip>

<ip>10.10.10.0/24</ip>

</networks>

......

</default>

</clickhouse>

replication user

The replication user is defined by interserver_http_credential tag. It does not relate to a ClickHouse client credentials configuration. If this tag is ommited then authentication is not used during replication. Ports 9009 and 9010(tls) provide low-level data access between servers. This ports should not be accessible from untrusted networks. You can specify credentials for authentication between replicas. This is required when interserver_https_port is accessible from untrusted networks. You can do so by defining user and password to the interserver credentials. Then replication protocol will use basic access authentication when connecting by HTTP/HTTPS to other replicas:

<interserver_http_credentials>

<user>replication</user>

<password>password</password>

</interserver_http_credentials>

Create users and roles

Now we can setup users/roles using a generic best-practice approach for RBAC from other databases, like using roles, granting permissions to roles, creating users for different applications, etc…

Example: 3 roles (dba, dashboard_ro, ingester_rw)

create role dba on cluster '{cluster}';

grant all on *.* to dba on cluster '{cluster}';

create user `user1` identified by 'pass1234' on cluster '{cluster}';

grant dba to user1 on cluster '{cluster}';

create role dashboard_ro on cluster '{cluster}';

grant select on default.* to dashboard_ro on cluster '{cluster}';

grant dictGet on *.* to dashboard_ro on cluster '{cluster}';

create settings profile or replace profile_dashboard_ro on cluster '{cluster}'

settings max_concurrent_queries_for_user = 10 READONLY,

max_threads = 16 READONLY,

max_memory_usage_for_user = '30G' READONLY,

max_memory_usage = '30G' READONLY,

max_execution_time = 60 READONLY,

max_rows_to_read = 1000000000 READONLY,

max_bytes_to_read = '5000G' READONLY

TO dashboard_ro;

create user `dash1` identified by 'pass1234' on cluster '{cluster}';

grant dashboard_ro to dash1 on cluster '{cluster}';

create role ingester_rw on cluster '{cluster}';

grant select,insert on default.* to ingester_rw on cluster '{cluster}';

create settings profile or replace profile_ingester_rw on cluster '{cluster}'

settings max_concurrent_queries_for_user = 40 READONLY, -- user can run 40 queries (select, insert ...) simultaneously

max_threads = 10 READONLY, -- each query can use up to 10 cpu (READONLY means user cannot override a value)

max_memory_usage_for_user = '30G' READONLY, -- all queries of the user can use up to 30G RAM

max_memory_usage = '25G' READONLY, -- each query can use up to 25G RAM

max_execution_time = 200 READONLY, -- each query can executes no longer 200 seconds

max_rows_to_read = 1000000000 READONLY, -- each query can read up to 1 billion rows

max_bytes_to_read = '5000G' READONLY -- each query can read up to 5 TB from a MergeTree

TO ingester_rw;

create user `ingester_app1` identified by 'pass1234' on cluster '{cluster}';

grant ingester_rw to ingester_app1 on cluster '{cluster}';

check

$ clickhouse-client -u dash1 --password pass1234

create table test ( A Int64) Engine=Log;

DB::Exception: dash1: Not enough privileges

$ clickhouse-client -u user1 --password pass1234

create table test ( A Int64) Engine=Log;

Ok.

drop table test;

Ok.

$ clickhouse-client -u ingester_app1 --password pass1234

select count() from system.numbers limit 1000000000000;

DB::Exception: Received from localhost:9000. DB::Exception: Limit for rows or bytes to read exceeded, max rows: 1.00 billion

clean up

show profiles;

┌─name─────────────────┐

│ default │

│ profile_dashboard_ro │

│ profile_ingester_rw │

│ readonly │

└──────────────────────┘

drop profile if exists readonly on cluster '{cluster}';

drop profile if exists profile_dashboard_ro on cluster '{cluster}';

drop profile if exists profile_ingester_rw on cluster '{cluster}';

show roles;

┌─name─────────┐

│ dashboard_ro │

│ dba │

│ ingester_rw │

└──────────────┘

drop role if exists dba on cluster '{cluster}';

drop role if exists dashboard_ro on cluster '{cluster}';

drop role if exists ingester_rw on cluster '{cluster}';

show users;

┌─name──────────┐

│ dash1 │

│ default │

│ ingester_app1 │

│ user1 │

└───────────────┘

drop user if exists ingester_app1 on cluster '{cluster}';

drop user if exists user1 on cluster '{cluster}';

drop user if exists dash1 on cluster '{cluster}';

9 - Client Timeouts

Timeout settings are related to the client, server, and network. They can be tuned to solve sporadic timeout issues.

It’s important to understand that network devices (routers, NATs, load balancers ) could have their own timeouts. Sometimes, they won’t respect TCP keep-alive and close the session due to inactivity. Only application-level keepalives could prevent TCP sessions from closing.

Below are the settings that will work only if you set them in the default user profile. The problem is that they should be applied before the connection happens. And if you send them with a query/connection, it’s already too late:

SETTINGS

receive_timeout = 3600,

send_timeout = 3600,

http_receive_timeout = 3600,

http_send_timeout = 3600,

http_connection_timeout = 2

Those can be set on the query level (but in the profile, too):

SETTINGS

tcp_keep_alive_timeout = 3600,

--!!!send_progress_in_http_headers = 1,

http_headers_progress_interval_ms = 10000,

http_wait_end_of_query = 1,

max_execution_time = 3600

https://clickhouse.com/docs/en/integrations/language-clients/javascript#keep-alive-nodejs-only

send_progress_in_http_headers will not be applied in this way because here we can configure the JDBC driver’s client options only (this

), but there is an option called custom_settings (this

) that will apply custom ch query settings for every query before the actual connection is created. The correct JDBC connection string will look like this:

jdbc:clickhouse://"${clickhouse.host}"/"${clickhouse.db}"?ssl=true&socket_timeout=3600000&socket_keepalive=true&custom_settings=send_progress_in_http_headers=1

Description

http_send_timeout & send_timeout: The timeout for sending data to the socket. If the server takes longer than this value to send data, the connection will be terminated (i.e., when the server pushes data to the client, and the client is not reading that for some reason).http_receive_timeout & receive_timeout:The timeout for receiving data from the socket. If the server takes longer than this value to receive the entire request from the client, the connection will be terminated. This setting ensures that the server is not kept waiting indefinitely for slow or unresponsive clients (i.e., the server tries to get some data from the client, but the client does not send anything).http_connection_timeout & connect_timeout: Defines how long ClickHouse should wait when it connects to another server. If the connection cannot be established within this time frame, it will be terminated. This does not impact the clients which connect to ClickHouse using HTTP (it only matters when ClickHouse works as a TCP/HTTP client).keep_alive_timeout: This is for ‘Connection: keep-alive’ in HTTP 1.1, only for HTTP. It defines how long ClickHouse can wait for the next request in the same connection to arrive after serving the previous one. It does not lead to any SOCKET_TIMEOUT exception, just closes the socket if the client doesn’t start a new request after that time.

sync_request_timeout– timeout for server ping. Defaults to 5 seconds.

In some cases, if the data sync request time out, it may be caused by many different reasons, basically it shouldn’t take more than 5 seconds for synchronous request-result protocol call (like Ping or TableStatus) in most of the normal circumstances, thus if time out setting too long, eg. 5 minutes or longer than that, then you will run into more overall performance issues. This is not good for any application on the server.

How to check the current timeouts:

SELECT

name,

value,

changed,

description

FROM system.settings

WHERE (name ILIKE '%send_timeout%') OR (name ILIKE '%receive_timeout%') OR (name ILIKE '%keep_alive%') OR (name ILIKE '%_http_headers') OR (name ILIKE 'http_headers_progres_%') OR (name ILIKE 'http_connection_%')

10 - Compatibility layer for the Altinity Kubernetes Operator for ClickHouse®

It’s possible to expose clickhouse-server metrics in the style used by the Altinity Kubernetes Operator for ClickHouse®. It’s for the clickhouse-operator grafana dashboard.

CREATE VIEW system.operator_compatible_metrics

(

`name` String,

`value` Float64,

`help` String,

`labels` Map(String, String),

`type` String

) AS

SELECT

concat('chi_clickhouse_event_', event) AS name,

CAST(value, 'Float64') AS value,

description AS help,

map('hostname', hostName()) AS labels,

'counter' AS type

FROM system.events

UNION ALL

SELECT

concat('chi_clickhouse_metric_', metric) AS name,

CAST(value, 'Float64') AS value,

description AS help,

map('hostname', hostName()) AS labels,

'gauge' AS type

FROM system.metrics

UNION ALL

SELECT

concat('chi_clickhouse_metric_', metric) AS name,

value,

'' AS help,

map('hostname', hostName()) AS labels,

'gauge' AS type

FROM system.asynchronous_metrics

UNION ALL

SELECT

'chi_clickhouse_metric_MemoryDictionaryBytesAllocated' AS name,

CAST(sum(bytes_allocated), 'Float64') AS value,

'Memory size allocated for dictionaries' AS help,

map('hostname', hostName()) AS labels,

'gauge' AS type

FROM system.dictionaries

UNION ALL

SELECT

'chi_clickhouse_metric_LongestRunningQuery' AS name,

CAST(max(elapsed), 'Float64') AS value,

'Longest running query time' AS help,

map('hostname', hostName()) AS labels,

'gauge' AS type

FROM system.processes

UNION ALL

WITH

['chi_clickhouse_table_partitions', 'chi_clickhouse_table_parts', 'chi_clickhouse_table_parts_bytes', 'chi_clickhouse_table_parts_bytes_uncompressed', 'chi_clickhouse_table_parts_rows', 'chi_clickhouse_metric_DiskDataBytes', 'chi_clickhouse_metric_MemoryPrimaryKeyBytesAllocated'] AS names,

[uniq(partition), count(), sum(bytes), sum(data_uncompressed_bytes), sum(rows), sum(bytes_on_disk), sum(primary_key_bytes_in_memory_allocated)] AS values,

arrayJoin(arrayZip(names, values)) AS tpl

SELECT

tpl.1 AS name,

CAST(tpl.2, 'Float64') AS value,

'' AS help,

map('database', database, 'table', table, 'active', toString(active), 'hostname', hostName()) AS labels,

'gauge' AS type

FROM system.parts

GROUP BY

active,

database,

table

UNION ALL

WITH

['chi_clickhouse_table_mutations', 'chi_clickhouse_table_mutations_parts_to_do'] AS names,

[CAST(count(), 'Float64'), CAST(sum(parts_to_do), 'Float64')] AS values,

arrayJoin(arrayZip(names, values)) AS tpl

SELECT

tpl.1 AS name,

tpl.2 AS value,

'' AS help,

map('database', database, 'table', table, 'hostname', hostName()) AS labels,

'gauge' AS type

FROM system.mutations

WHERE is_done = 0

GROUP BY

database,

table

UNION ALL

WITH if(coalesce(reason, 'unknown') = '', 'detached_by_user', coalesce(reason, 'unknown')) AS detach_reason

SELECT

'chi_clickhouse_metric_DetachedParts' AS name,

CAST(count(), 'Float64') AS value,

'' AS help,

map('database', database, 'table', table, 'disk', disk, 'hostname', hostName()) AS labels,

'gauge' AS type

FROM system.detached_parts

GROUP BY

database,

table,

disk,

reason

ORDER BY name ASC

nano /etc/clickhouse-server/config.d/operator_metrics.xml

<clickhouse>

<http_handlers>

<rule>

<url>/metrics</url>

<methods>POST,GET</methods>

<handler>

<type>predefined_query_handler</type>

<query>SELECT * FROM system.operator_compatible_metrics FORMAT Prometheus</query>

<content_type>text/plain; charset=utf-8</content_type>

</handler>

</rule>

<defaults/>

<rule>

<url>/</url>

<methods>POST,GET</methods>

<headers><pragma>no-cache</pragma></headers>

<handler>

<type>dynamic_query_handler</type>

<query_param_name>query</query_param_name>

</handler>

</rule>

</http_handlers>

</clickhouse>

curl http://localhost:8123/metrics

# HELP chi_clickhouse_metric_Query Number of executing queries

# TYPE chi_clickhouse_metric_Query gauge

chi_clickhouse_metric_Query{hostname="LAPTOP"} 1

# HELP chi_clickhouse_metric_Merge Number of executing background merges

# TYPE chi_clickhouse_metric_Merge gauge

chi_clickhouse_metric_Merge{hostname="LAPTOP"} 0

# HELP chi_clickhouse_metric_PartMutation Number of mutations (ALTER DELETE/UPDATE)

# TYPE chi_clickhouse_metric_PartMutation gauge

chi_clickhouse_metric_PartMutation{hostname="LAPTOP"} 0

11 - How to convert uniqExact states to approximate uniq functions states

uniqExactState

uniqExactState is stored in two parts: a count of values in LEB128 format + list values without a delimiter.

In our case, the value is sipHash128 of strings passed to uniqExact function.

┌─hex(uniqExactState(toString(arrayJoin([1]))))─┐

│ 01E2756D8F7A583CA23016E03447724DE7 │

└───────────────────────────────────────────────┘

01 E2756D8F7A583CA23016E03447724DE7

^ ^

LEB128 sipHash128

┌─hex(uniqExactState(toString(arrayJoin([1, 2]))))───────────────────┐

│ 024809CB4528E00621CF626BE9FA14E2BFE2756D8F7A583CA23016E03447724DE7 │

└────────────────────────────────────────────────────────────────────┘

02 4809CB4528E00621CF626BE9FA14E2BF E2756D8F7A583CA23016E03447724DE7

^ ^ ^

LEB128 sipHash128 sipHash128

So, our task is to find how we can generate such values by ourself.

In case of String data type, it just the simple sipHash128 function.

┌─hex(sipHash128(toString(2)))─────┬─hex(sipHash128(toString(1)))─────┐

│ 4809CB4528E00621CF626BE9FA14E2BF │ E2756D8F7A583CA23016E03447724DE7 │

└──────────────────────────────────┴──────────────────────────────────┘

The second task: it needs to read a state and split it into an array of values.

Luckily for us, ClickHouse® use the exact same serialization (LEB128 + list of values) for Arrays (in this case if uniqExactState and Array are serialized into RowBinary format).

We need one a helper – UDF function to do that conversion:

cat /etc/clickhouse-server/pipe_function.xml

<clickhouse>

<function>

<type>executable</type>

<execute_direct>0</execute_direct>

<name>pipe</name>

<return_type>Array(FixedString(16))</return_type>

<argument>

<type>String</type>

</argument>

<format>RowBinary</format>

<command>cat</command>

<send_chunk_header>0</send_chunk_header>

</function>

</clickhouse>

This UDF – pipe converts uniqExactState to the Array(FixedString(16)).

┌─arrayMap(x -> hex(x), pipe(uniqExactState(toString(arrayJoin([1, 2])))))──────────────┐

│ ['4809CB4528E00621CF626BE9FA14E2BF','E2756D8F7A583CA23016E03447724DE7'] │

└───────────────────────────────────────────────────────────────────────────────────────┘

And here is the full example, how you can convert uniqExactState(string) to uniqState(string) or uniqCombinedState(string) using pipe UDF and arrayReduce('func', [..]).

-- Generate demo with random data, uniqs are stored as heavy uniqExact

CREATE TABLE aggregates

(

`id` UInt32,

`uniqExact` AggregateFunction(uniqExact, String)

)

ENGINE = AggregatingMergeTree

ORDER BY id as

SELECT

number % 10000 AS id,

uniqExactState(toString(number))

FROM numbers(10000000)

GROUP BY id;

0 rows in set. Elapsed: 2.042 sec. Processed 10.01 million rows, 80.06 MB (4.90 million rows/s., 39.21 MB/s.)

-- Let's add a new columns to store optimized, approximate uniq & uniqCombined

ALTER TABLE aggregates

ADD COLUMN `uniq` AggregateFunction(uniq, FixedString(16))

default arrayReduce('uniqState', pipe(uniqExact)),

ADD COLUMN `uniqCombined` AggregateFunction(uniqCombined, FixedString(16))

default arrayReduce('uniqCombinedState', pipe(uniqExact));

-- Materialize defaults in the new columns

ALTER TABLE aggregates UPDATE uniqCombined = uniqCombined, uniq = uniq

WHERE 1 settings mutations_sync=2;

-- Let's reset defaults to remove the dependancy of the UDF from our table

ALTER TABLE aggregates

modify COLUMN `uniq` remove default,

modify COLUMN `uniqCombined` remove default;

-- Alternatively you can populate data in the new columns directly without using DEFAULT columns

-- ALTER TABLE aggregates UPDATE

-- uniqCombined = arrayReduce('uniqCombinedState', pipe(uniqExact)),

-- uniq = arrayReduce('uniqState', pipe(uniqExact))

-- WHERE 1 settings mutations_sync=2;

-- Check results, results are slighty different, because uniq & uniqCombined are approximate functions

SELECT

id % 20 AS key,

uniqExactMerge(uniqExact),

uniqCombinedMerge(uniqCombined),

uniqMerge(uniq)

FROM aggregates

GROUP BY key

┌─key─┬─uniqExactMerge(uniqExact)─┬─uniqCombinedMerge(uniqCombined)─┬─uniqMerge(uniq)─┐

│ 0 │ 500000 │ 500195 │ 500455 │

│ 1 │ 500000 │ 502599 │ 501549 │

│ 2 │ 500000 │ 498058 │ 504428 │

│ 3 │ 500000 │ 499748 │ 500195 │

│ 4 │ 500000 │ 500791 │ 500836 │

│ 5 │ 500000 │ 502430 │ 497558 │

│ 6 │ 500000 │ 500262 │ 501785 │

│ 7 │ 500000 │ 501514 │ 495758 │

│ 8 │ 500000 │ 500121 │ 498597 │

│ 9 │ 500000 │ 502173 │ 500455 │

│ 10 │ 500000 │ 499144 │ 498386 │

│ 11 │ 500000 │ 500525 │ 503139 │

│ 12 │ 500000 │ 503624 │ 497103 │

│ 13 │ 500000 │ 499986 │ 497992 │

│ 14 │ 500000 │ 502027 │ 494833 │

│ 15 │ 500000 │ 498831 │ 500983 │

│ 16 │ 500000 │ 501103 │ 500836 │

│ 17 │ 500000 │ 499409 │ 496791 │

│ 18 │ 500000 │ 501641 │ 502991 │

│ 19 │ 500000 │ 500648 │ 500881 │

└─────┴───────────────────────────┴─────────────────────────────────┴─────────────────┘

20 rows in set. Elapsed: 2.312 sec. Processed 10.00 thousand rows, 7.61 MB (4.33 thousand rows/s., 3.29 MB/s.)

Now, lets repeat the same insert, but in that case we will also populate uniq & uniqCombined with values converted via sipHash128 function.

If we did everything right, uniq counts will not change, because we inserted the exact same values.

INSERT INTO aggregates SELECT

number % 10000 AS id,

uniqExactState(toString(number)),

uniqState(sipHash128(toString(number))),

uniqCombinedState(sipHash128(toString(number)))

FROM numbers(10000000)

GROUP BY id;

0 rows in set. Elapsed: 5.386 sec. Processed 10.01 million rows, 80.06 MB (1.86 million rows/s., 14.86 MB/s.)

SELECT

id % 20 AS key,

uniqExactMerge(uniqExact),

uniqCombinedMerge(uniqCombined),

uniqMerge(uniq)

FROM aggregates

GROUP BY key

┌─key─┬─uniqExactMerge(uniqExact)─┬─uniqCombinedMerge(uniqCombined)─┬─uniqMerge(uniq)─┐

│ 0 │ 500000 │ 500195 │ 500455 │

│ 1 │ 500000 │ 502599 │ 501549 │

│ 2 │ 500000 │ 498058 │ 504428 │

│ 3 │ 500000 │ 499748 │ 500195 │